In This Edition

1. Quotations to Open On

2. Feature Articles

2.1 PM and SE Integration – Overcoming ‘Conditioned Thinking’ by Martin Griffin

2.2 A Practical Example of the Process to Define a System Functional Architecture by Alfonso Garcia and Jose L. Fernandez

3. Additional Articles

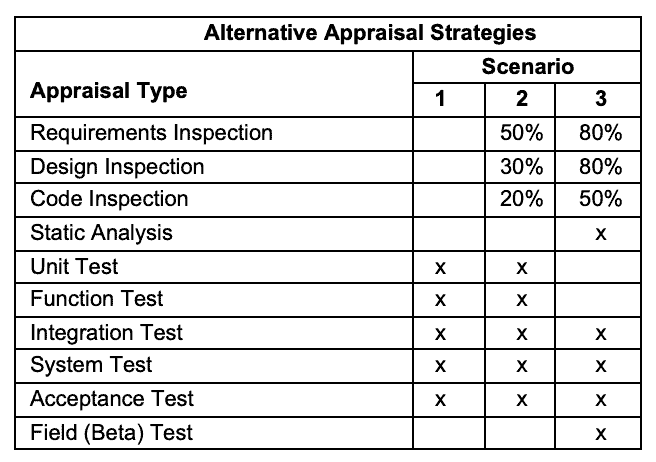

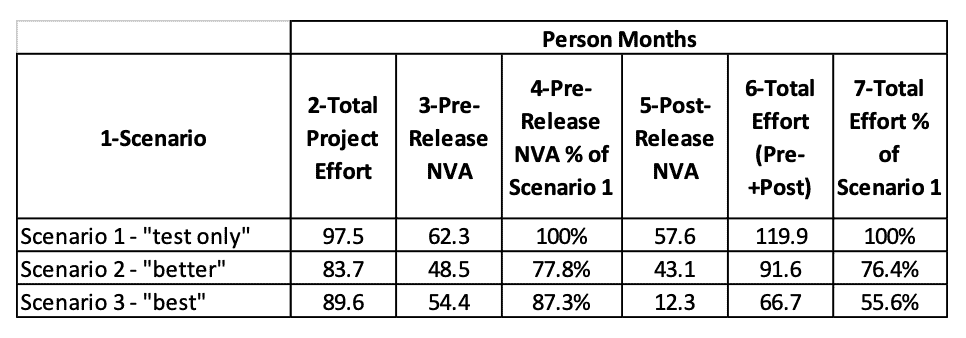

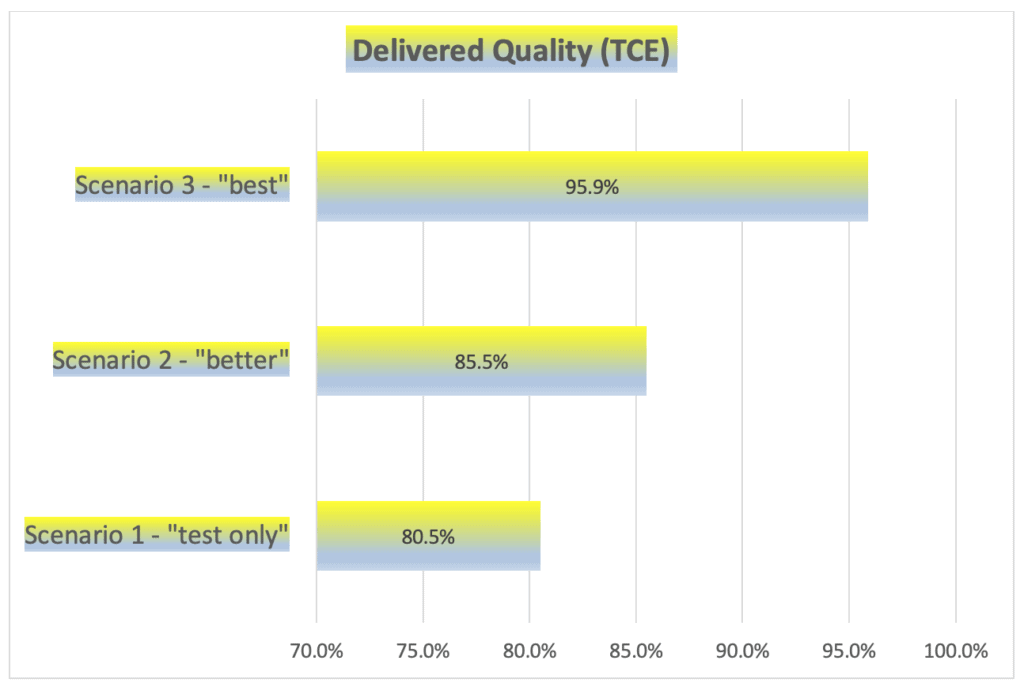

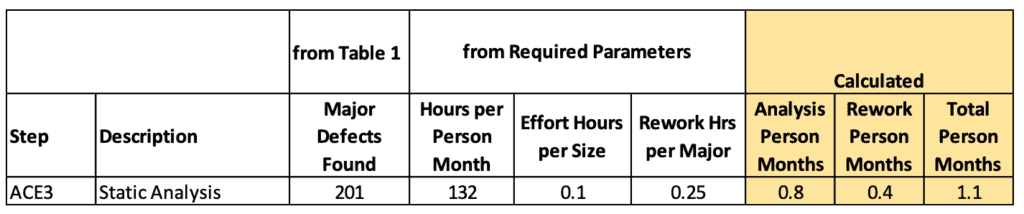

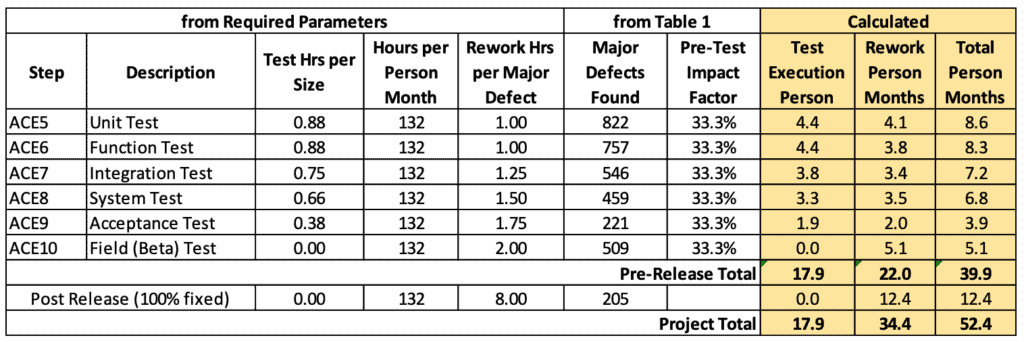

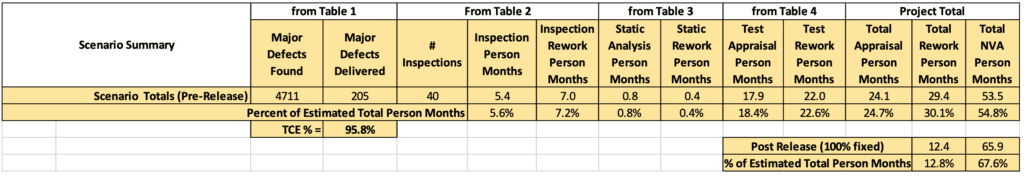

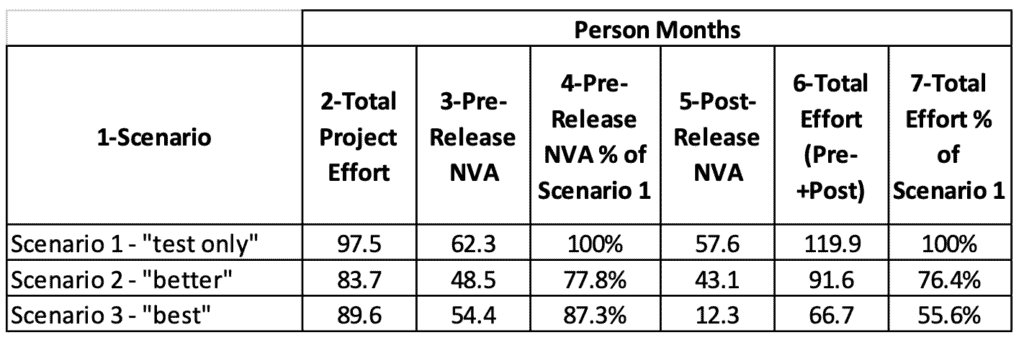

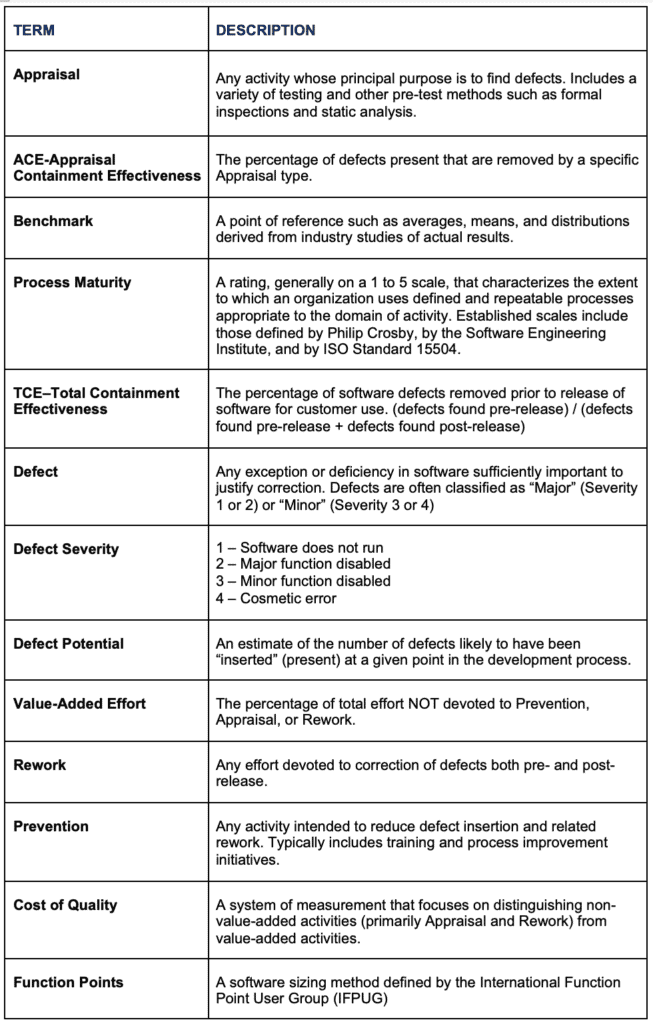

3.1 An Economic Analysis of Software Defect Removal Methods by Gary A. Gack

3.2 Looking back: 2020-The Year-In-Review – and Looking forward: in 2021 Things WILL get Better! by Mark Evans

4. Systems Engineering News

4.1 The PPI-INCOSE Systems Engineering Tools Database (SETDB) is now live!

4.2 SysML_v2 Schedule

4.3 Systems Engineering Internship at ISAE-SUPAERO

4.4 INCOSE 2020 Product of the Year Award

4.5 Dr. Keith Willet Discusses Systems Engineering the Conditions of Possibility

4.6 Seminar Presentations Concerning Systems Engineering Concepts

4.7 Upcoming Open Training on Arcadia and Capella

4.8 INCOSE Award Nominations

5. Featured Organizations

5.1 Centre for Systems Engineering and Innovation – Imperial College London

5.2 World Organization of Societal Systems Engineering (WOSSE)

5.3 UTC Institute for Advanced Systems Engineering

6. News on Software Tools Supporting Systems Engineering

6.1 New Capella release: version 5.0.0

6.2 PTC Completes Acquisition of Arena Solutions

6.3 3SL Announces the Release of Cradle 7.6

7. Systems Engineering Publications

7.1 Tools of Systems Thinkers: Learn Advanced Deduction, Decision-Making, and Problem-Solving Skills with Mental Models and System Maps

7.2 Clean Architecture: A Craftsman’s Guide to Software Structure and Design

7.3 What Is a Complex System?

7.4 Introduction to the Theory of Complex Systems

7.5 INCOSE Model-Based Capabilities Matrix

7.6 Systems Thinking: Managing Chaos and Complexity: A Platform for Designing Business Architecture

8. Education and Academia

8.1 Master’s in Systems Engineering Leadership at Worcester Polytechnic Institute Worcester, Massachusetts USA

8.2 New Technology and Team Driven Innovation Certificate Program University of California Irvine (USA) Division of Continuing Education

8.3 Internet-Based Testing for Students and Professionals

9. Some Systems Engineering-Relevant Websites

10. Standards and Guides

10.1 SESA Involvement in Systems Engineering Standards

10.2 ISO/IEC/IEEE 15288:2015 Systems and Software Engineering – System Life Cycle Processes

10.3 SAE International Launches OnQue Digital Standards System

11. Some Definitions to Close On

11.1 Performance Measurement

11.2 System Integration

12. Conferences and Meetings

12.1 The 11th Israeli International Conference on Systems Engineering

13. PPI and CTI News

13.1 PPI’s Updated Definition of Systems Engineering

13.2 PPI Welcomes John Fitch

13.3 Team PPI Attends the INCOSE International Workshop

14. PPI and CTI Events

15. PPI Upcoming Participation in Professional Conferences

1. Quotations to Open On

Having subsystem developers define their own requirements is the engineering equivalent of anarchy (unless the subsystem developer is responsible, accountable, and qualified to design the parent system).


Launching rockets is easy; getting engineers to agree on standards is hard.

Wayne Hale


Only a crisis, actual or perceived, produces real change.

Milton Friedman

2. Feature Articles

2.1 PM and SE Integration – Overcoming ‘Conditioned Thinking’

by

Martin Griffin

Systems Engineering Society of Australia[1] (SESA) Defense and Aero Lead

February 2021

Abstract

Conditioned Thinking is a human trait. Although we are all too quick to deny that our decision making is influenced by something beyond our direct control, it is an undeniable fact, and if you accept that as a given, you allow yourself to understand, or even appreciate, how others are thinking.

“Tradition, long conditioned thinking, can bring about a fixation, a concept that one readily accepts, perhaps not with a great deal of thought.”

Jiddu Krishnamurti

“One believes things because one has been conditioned to believe them.”

Aldous Huxley, Brave New World

In the context of project or program delivery, every organization that pursues this type of societal endeavor is inevitably confronted with today’s reality of project failure, and most are left wondering how it happened. Those aspiring to high levels of process maturity undertake ‘lessons learned’ and root cause reviews with resulting process improvement initiatives, but rarely (if ever) would the auditing highlight ‘because of Conditioned Thinking’. So as each discipline or Integrated Project Team (IPT) tries to ascertain the process that wasn’t quite adhered to, or the point at which reality started to deviate from the plan, unconscious bias is still inherent. Without fail, human nature starts the finger pointing (or worse), and the existing inter-disciplinary rifts are further ingrained through more process and ‘clearer lines of accountability’ intended to prevent recurrence. Unfortunately, these actions only increase the likelihood of recurrence and perpetuate the problem.

PMI and INCOSE studies from 2011 to 2014 identified the concept of ‘Unproductive Tension’ between the two most prominent disciplines of program delivery, Program Management and Systems Engineering. The issues were comprehensively captured in the book Integrating Program Management and Systems Engineering [Rebentisch, 2017]. What these studies didn’t delve into, however, was that the practitioners of each discipline had been ‘conditioned’ by their training and also by the organizational constructs within which they were required to operate; the root cause of the unproductive tension is the coalescence of disconnected viewpoints and a divergence of technical language.

This paper extends the previous work presented in PPI SyEN Issue 72, December 2018, “Integrating Program Management and Systems Engineering”, which explored the historical evolution of Program Management and Systems Engineering and their subsequent divergence. The focus of this paper is to further explore the paradigm of ‘Conditioned Thinking’ within program delivery; to elaborate on the conclusions from that earlier work to assist with identifying the limiting constructs within any delivery-focused organization; and to provide guidance on how to overcome the ‘Unproductive Tension’ resulting from this ‘conditioning’.

Copyright © 2021 by Martin Griffin. All rights reserved.

Introduction

The previous paper[2] surmised that the root cause of poor integration of Project Management and Systems Engineering is actually ‘constrained thinking’. This is an outcome of conditioned thinking whereby the ‘conditioning’ has limited the person’s beliefs and understanding to a constrained and somewhat prescriptive set of processes and allowable actions. In later sections, specific examples of this will be detailed for both disciplines to show how the resulting unproductive tension arises. Further to the identification of this root cause, the previous work identified the following integration issues as particular areas to be explored, and these are elaborated throughout this paper:

- Human Nature: Conditioned or Local Mindsets

- Communication: Speaking a different language

- Culture: Process Compliance – Resistance to Change

- Environment: Industry norms – e.g. Rail Standards

- Societal: Business practices – Contracting methods

- Organizational: Organization structure with Role definitions

For the Systems Engineering (SE) discipline, the constrained thinking usually takes the form of automatically (blindly) applying the full process models associated with requirements management and design synthesis, and inevitably gold-plating or over-prescribing system functionality at the expense of budget and schedule.

For the Project Management (PM) discipline, the constrained thinking is regularly exhibited in the forced delivery of immature contract deliverables to achieve a contractual milestone and release the associated contract payment, which leads to rework, technical non-conformance, and the inevitable realization of technical risk toward the end of the project.

The unfortunate reality in modern programs is that almost every project experiences both of the above constrained thinking outcomes concurrently, and therefore the teams allocated responsibilities under either discipline experience ‘unproductive tension’. The project manager is eternally frustrated that the technical team continues to develop technical products and deliverables beyond the cut-off date for peer reviews and quality checks. And the engineering manager is annoyed that the members of the project management team are rushing (and often bypassing) review activities in order to release deliverables that inevitably have to include caveats and disclaimers regarding incomplete technical activities.

When one overlays organizational culture onto this team division, behaviors start to emerge that can be unique to specific companies or even industry sectors. For example, project managers may be rewarded and specifically singled out for accolades for achieving early project milestones through heavy-handed suppression of technical leads, even though the project is on a path to over-runs and reputational damage. Even the simple act of creating program role hierarchies contributes: the program manager is the single point of accountability, with direct upward reporting to company directors or Chief Executive Officers (CEOs) and downward line authority to Integrated Project Team leads (usually Project Managers [PMs]), while technical leads are relegated to layers of the hierarchy that are not able to influence the budgets or work packages assigned.

At first glance these aspects may appear to be simply poor role definition or misaligned accountability assignment, but these cultural ‘norms’ within organizations become so deep-seated that the conditioning of the delivery teams goes beyond their discipline training to become fixed (constrained) beliefs extending from one failed project to the next. Most of those involved in ‘delivery’ programs are all too familiar with statements similar to the following quote from an Australian Company Director of a renowned rolling stock organization, when queried as to why the rolling stock could not be commissioned on infrastructure that had been delivered to schedule:

“For all new train projects, especially of this size and complexity, it is not uncommon to experience some delays as issues are identified during testing and rectified accordingly.”

Now this would seem like a reasonable argument (excuse) if the delays were minor and the rectification effort associated with the issues were manageable within planned risk margins; however, time and again the media reports on Government infrastructure programs being delayed by multiple years and costing billions of additional taxpayer dollars – this recurring phenomenon suggests that “not uncommon” is a deep-seated cultural norm. For the project related to the comment, the ‘issues’ that needed to be ‘rectified’ were a redesign of the platforms and major modifications to the rolling stock, including the creation of separate configurations, resulting in significant delays to entry into service. Yet the project proceeded past the Detailed Design Review with accolades regarding achievement of an early milestone and early entry into testing.

Thus, at the core of this integration challenge is the ‘constrained thinking’ originating from process-driven technical standards developed by the respective industry representative bodies (INCOSE and PMI). The standards, including ISO 15288 and ISO 21500 (or the more commonly applied Project Management Body of Knowledge (PMBOK) and PRINCE2[3]), are a necessary construct, as they enable the ‘principles’ associated with the ‘methodologies’ to be documented in a way that permits consistent application by practitioners, and hence they provide common taxonomies and terminology used throughout industry. Unfortunately, they also, intentionally or otherwise, prescribe processes to be adopted and adhered to in order for organizations to claim delivery methodologies ‘compliant’ with the standard. The previous paper details how these two specific methodologies evolved and consequently diverged in how their principles are applied – this highlights the modern dilemma: the practitioners of each discipline have little to no familiarity with the other’s application. The end result is that today’s project managers pursue execution of the contracted scope of work and deliverable lists at the potential expense of a successful capability. And today’s systems engineers hone and refine requirements until they are not practically achievable within the contracted budget and schedule.

There is a common theme of practitioner conditioning constraining the individual’s ability to consider or adopt other points of view related to any business objective, in particular the delivery of a customer’s needed capability or service. An answer proposed by the PMI-INCOSE-MIT collaboration[4] was to educate the practitioners of the two disciplines to increase awareness of the other’s practices. This might go some way to reducing the ‘constrained thinking’; however, it would not alleviate the ‘conditioned thinking’ and is highly unlikely to alter an individual’s application of their own trained beliefs. The operative critical questions are: what options exist to bridge the chasm, and what can actually integrate the actions and endeavors of diverse multidisciplinary project teams to effect successful delivery outcomes?

Conditioned Mindsets – What were they thinking?

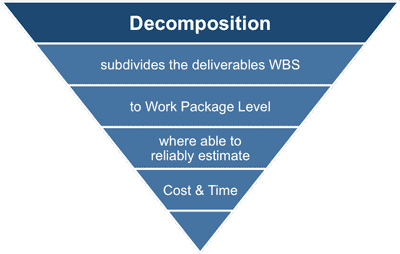

Project Managers are not all created equal, and even if they have undergone the same training and certification courses, the on-the-job element of their knowledge development directly influences how they apply that training. Looking at a key PM aspect of work decomposition, which involves converting scoping statements to work packages and the associated Work Breakdown Structures (WBS), most PM practitioners would tackle this task slightly differently. The one consistent theme, however, driven by their conditioning, is to create these packages directly from the contract Statement of Work (SOW) in a way that they can be apportioned to a specific team. There is a strong focus on deliverables specifically referenced by the contract (see Figure 1) and a dogmatic viewpoint of allocating deliverables associated with specific disciplines to those specific teams – for example, a design documentation package allocated to an engineering team deemed responsible for design. This also assumes that responsibility for estimating the budget and schedule is allocated to that same team.

Figure 1 – Project Management Construct for Work Decomposition

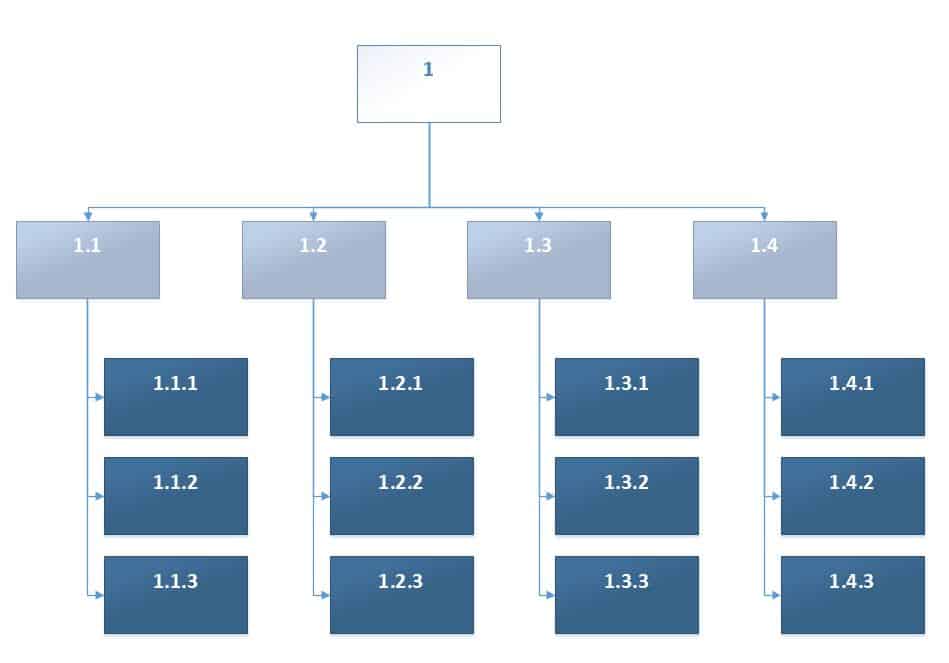

The resulting first cut of any work decomposition activity using a Project Management conditioned mindset is therefore a siloed and disjointed WBS based solely on the project deliverables specified in the SOW. Figure 2 shows a generic hierarchical WBS – what transpires is that each vertical element gets ‘chunks’ of scope dropped in that are related to a particular discipline/team. For example, one column would be PM tasks, another engineering tasks, another build tasks, yet another verification tasks, and so on.

Figure 2 – Generic Work Breakdown Structure Construct

For projects that are large enough to support Integrated Project Teams (IPTs), this same approach is applied and the IPTs that are formed are discipline-based – they become silos within the broader organization, thus defeating the benefit originally intended by creating “integrated” teams.

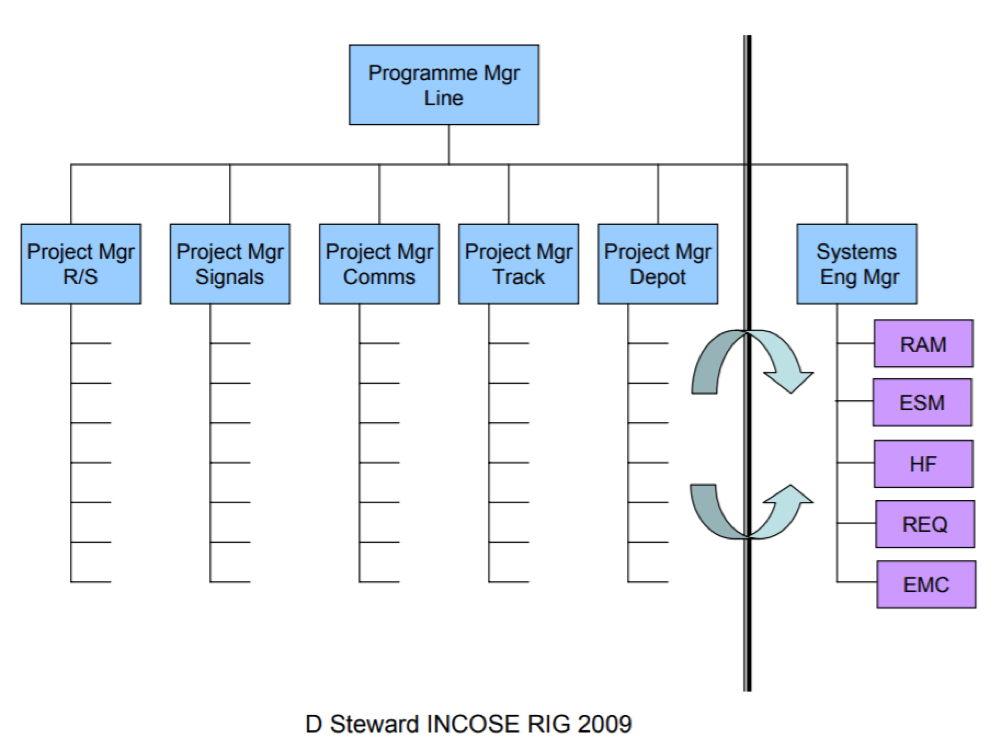

The following example, Figure 3, is a generic structure that usually occurs within the rail industry and clearly shows the conditioned thinking when translating the SOW into a WBS. In this case the scope associated with Rolling Stock (R/S) gets ‘cherry-picked’ out and assigned to the R/S IPT; the scope associated with track and infrastructure gets assigned to the track IPT; and the scope associated with the depot goes to the depot IPT. This conditioning is so ingrained that, because the SOW mentions that systems engineering is needed, an entire siloed Systems Engineering IPT gets created so that those associated deliverables have a location in which to ‘live’ and someone responsible for delivering them. Consistently, these programs have integration issues at delivery so significant that entire systems have to be re-engineered.

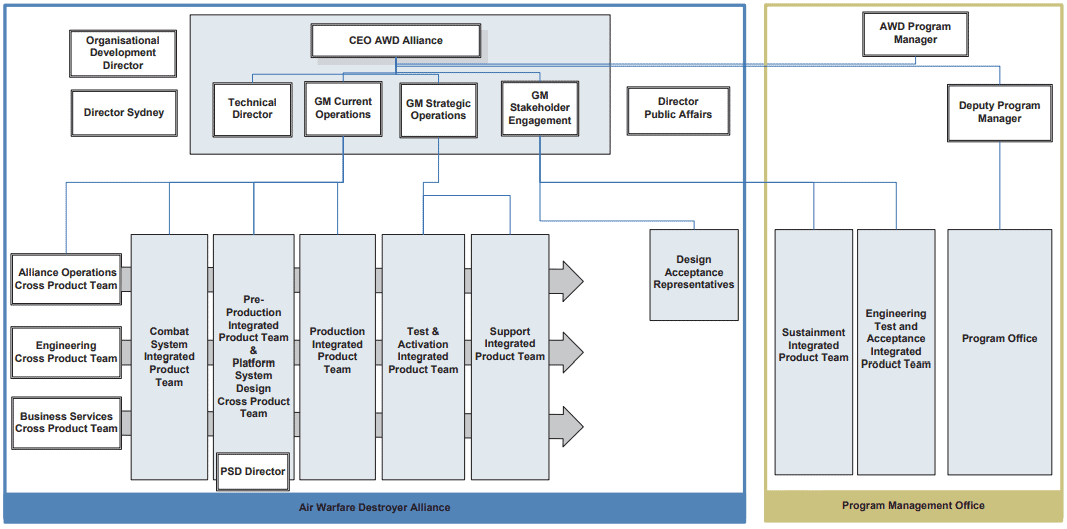

Figure 3 – Generic Representation of Rail Industry IPTs

Now extend the thinking to programs where the work is being delivered across multiple organizations: the conditioning is still so pervasive that the IPTs become company-specific, and it is very difficult for PMs to consider the concept of ‘shared work’ or of IPTs consisting of employees of different companies. Even when the work scope is highly inter-dependent across these IPTs, the conditioned thinking forces the conjuring of alliance contract models and cross-team teams that don’t actually have any effect at the work package level, because the ‘work’ is not decomposed in a way that permits effective delivery across the various teams. The recent ‘project of concern’ within Australian defense associated with delivering the three Air Warfare Destroyers (AWDs) had an IPT construct as shown in Figure 4, and the magnitude of rework, churn, and inter-dependence delays directly resulting from this SOW decomposition was mind-boggling. If you look closely, you will notice that there is no Systems Engineering IPT, even though defense scopes are predominantly built around these principles; but do not fear, the Engineering Cross Product Team was allocated ALL of those deliverables in the early phases and the Support/Sustainment IPTs were allocated the later deliverables. Needless to say, these were not actually integrated, and most support deliverables were created in isolation from the design ones.

Figure 4 – Air Warfare Destroyer (AWD) Design and Build IPT Construct

Of course, none of the above is helped by the Customer’s formation of the Contract, and it is not coincidental that the practitioners engaged to create the SOW are actually project managers who have all undergone the same conditioning. Thus, contracts that directly link payment milestones to deliverables due at specific schedule milestones encourage, and actually enforce, the decomposition approach taken by the PMs planning the work. The same conditioning has the Customer’s practitioners ‘ticking the box’ at each milestone to indicate that the ‘deliverable’ has been received and therefore that the gate can be passed, even though there is little to no evidence that a solution is converging or that the project outcome will still be achieved.

Speaking a different language – What you talkin’ ‘bout Willis

In any given industry there are taxonomies and definitions that are specific to that industry and would not be known by individuals working in other industries. The same can be said within certain organizations, where the process models attract internal colloquialisms, and these quite often filter down from customer terminology to the terminology used by the suppliers. Additionally, most (if not all) professions attract their own ‘languages’, which individuals in other professions would struggle to understand in meaning or context of use. Between the two disciplines referenced in this paper, this difference is quite palpable and regularly leads to issues in achieving a successful project outcome. The previous paper explored the historical divergence that has led to these significant ‘language’ challenges, but there is no clear resolution to this in today’s programs.

It is important to clarify where the language confusions are leading to ‘unproductive tension’ and/or miscommunication. Both disciplines are engaged under the same contractual mechanisms on any given program, so it would be expected that the contractual language and terminology (used by a customer) would take precedence and therefore avoid these inter-discipline issues. What actually transpires is that the Contract uses mixed language, because the individuals putting the SOW together are practitioners of each discipline who don’t understand why the other is using a particular term. Further, the Contract implies responsibility and/or accountability for certain scope and deliverables, which gets interpreted as ‘done in accordance with that discipline’s processes’. The Contractual nuances will be explored later in this paper; however, some specific areas causing confusion are listed below:

- Data Management vs Configuration Management vs Contractor Deliverable Requirements List

- Major System Reviews as part of the Systems Engineering Program

- Contract Master Schedule vs Engineering Schedule

- Function and Performance Specification vs System Specification vs Product Specification

- Monitoring and Control vs Measurement and Analysis vs Earned Value Management (EVM)

- Contractual Requirements (for example, Statement of Requirements) vs Technical Requirements

Even when the Contract is clear in its references or definitions of terms, what actually gets submitted by a company as a ‘compliant’ proposal can lead to language issues. For example, the contract may have required a System Requirements Validation activity and also requested an initial draft of a System Specification (which would normally be an output of the activity). The company’s submission may assume that, if they are awarded the contract, their proposed solution is ‘accepted’ by the Customer as compliant with the System Specification, so the PM starts planning to build that product, while the Engineering Manager is planning to analyze the requirements and modify the product where it is not compliant. Another potential outcome is that the Engineering Manager begins requesting technical data related to a product to produce Objective Quality Evidence to support a compliance statement, but the PM overrides the request to the sub-contractor, stating that compliance is assumed.

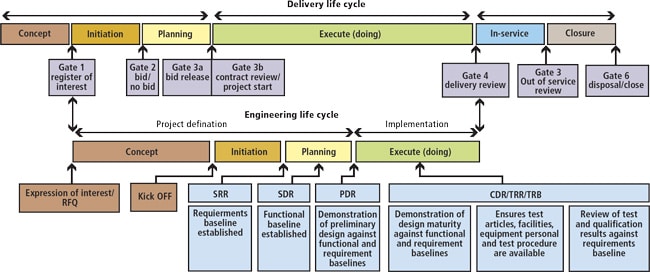

The majority of language issues originate from the processes described within the particular standard used to train (and thereby condition) the practitioners. These same issues exist even within the disciplines themselves, depending on what training or certification a person has. Within PM there are process differences between ISO 21500, PMBOK, and PRINCE2, leading to slightly different approaches taken by these practitioners, and within SE there are differences between ISO 15288, EIA-632, and others such as the obsolete MIL-STD-499. The project lifecycle models shown in Figure 5 include a Systems Engineering model based on EIA-632, which references a Critical Design Review (CDR) that has a significantly different objective than a Detailed Design Review (DDR), which is more prominent within the ISO/IEC 15288 approach. To a PM these two review types are exactly the same, and this is one of the most prevalent areas of unproductive tension within a delivery project. In this instance, a CDR triggers an approval process with the Customer to permit the ‘start’ of production activities, and may require a number of prototype builds to have occurred and undergone testing to validate design decisions or to de-risk some unproven technology. This would usually be followed by a Production Readiness Review (PRR), at which the detailed drawings and production prequalification data would be presented to get approval to start building or installing. A PM can easily mistake a DDR to mean a similar thing, only to be faced with a customer demanding detailed production drawings in order to ‘verify’ the design.

Figure 5 – Traditional Representation of Phases and Review Gates

Process Compliance

On large projects of long duration and with many teams working to produce the required outcome, having well developed and detailed processes is necessary to ensure consistent and effective delivery and to assure an acceptable level of quality of the related deliverables. Even on shorter duration, agile team projects, having processes to work to provides a level of confidence both internally for the team and externally to the customer. The challenge, of course, is to ensure that the processes adopted are suited to the type of project and are able to dovetail into any external dependencies (for example, product suppliers) and also with the customer as the end recipient of the project’s outputs.

For the most part, a company supplying to a customer will have established its processes to ‘mirror’ those of its customer. So a company that delivers projects to customers who use systems engineering for their capability definition and whole-of-life support would likely have project lifecycle models developed based on systems engineering principles. These companies will also have adopted the SE approach of ‘tailoring’, whereby certain process steps will be mandatory to ensure that a legal obligation or an auditing need is met, while others will be allowed to be tailored within certain guidelines or parameters. Unfortunately, this tailoring tends to be done at the commencement of a program, based on the Contract SOW, and turns out to be unsuited to later parts of the program; worse, it may include process steps just because the SOW referred to them, even though no scope actually requires those processes. Also, through the course of a Program, many employees join and leave, and so the original tailoring basis is lost. Quite often, the processes applied to a project are just taken as gospel from the company’s Business Management System (BMS) and are applied on the basis that ‘this is a requirement of the BMS’. Another regular occurrence is that, during a project quality audit, the quality team identifies BMS processes that they believe are applicable (because they are being used on all the other projects) and raises a Corrective Action against the program, which gets executive-level visibility, and the PM team just agrees to add the process in order to make the issue go away.

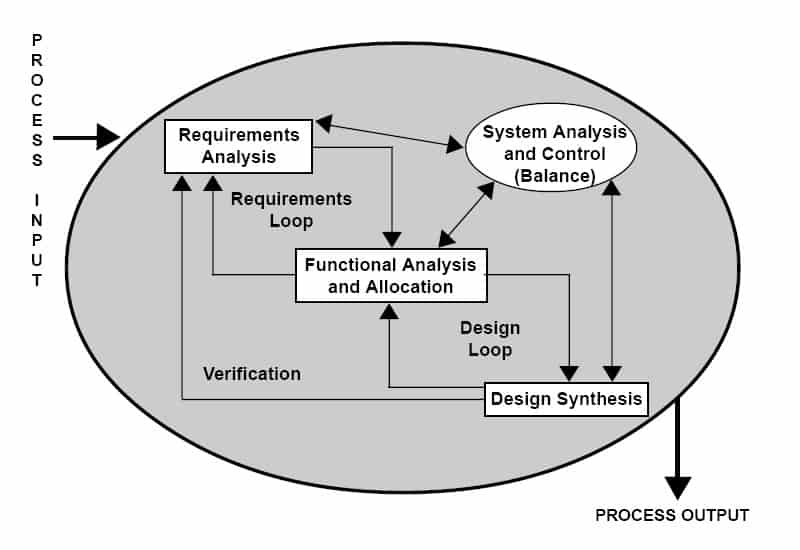

So, at the first process layer of any Program, mature organizations face only a low risk of incurring unproductive overhead related to process adoption. However, at the next layer or lower levels of process, the conditioned thinking begins to ‘kick in’. When looking at the application of System Requirements Validation (refer to Figure 6), the company’s BMS might have clearly defined processes identifying the tools to be used (and even when certain tools requiring dedicated skilled users should be applied), and it might detail the required level of traceability between customer specifications and final product specifications. But at the next level of process, where requirements derivation and design synthesis occur, the BMS would likely just point to some best practice or Community of Excellence (COE) examples from other projects. It is at this level that the expertise of the individuals appointed to the applicable role is relied upon, and these processes or work instructions get developed specifically for the project. And it is here that the conditioned thinking causes a compliance mentality that constrains programs and can lead to requirements being developed that are not actually implementable with the concept solution, or not verifiable because they are unbounded or untestable.

Figure 6 – Traditional Requirements Validation Process

A very common occurrence is an unwavering belief that Function and Performance Specification (FPS) requirements must be taken through to the System Specification, even if a requirement is listed as ‘desirable’. Worse still, they get ‘copied’ word-for-word into the specification, and no analysis or feasibility studies get performed, because the PM constrains the schedule for this part of the project, seeing it as a purely paper-based activity. The PM team is expecting the responsible engineers to produce contract deliverables (not un-scoped analysis papers) and generally isn’t aware of the technical implications associated with this activity, because it is purely focused on achieving that project milestone. If the engineering team raises any concerns that the requirements may be difficult to achieve, they will quite often be told not to raise any issues with the customer at this early stage, because doing so might imply that the proposed solution wasn’t suitable, and everyone proceeds ‘hoping for the best’ (although the ‘Unproductive Tension’ increases). If the project ‘gets lucky’ and the particular requirement gets transferred into an external SOW to be tendered by Sub-Contractors, the project may be informed that the requirement is not achievable or receive an unexpectedly high quotation, identifying the over-prescribed requirement. But more often this slips through the cracks until the product has been built, reaches the testing phase, and is found to be ‘non-compliant’.

Another trend that has been emerging in recent years is the transfer of processes from one industry to another. With the realization that programs are becoming more complicated, and even complex, with interoperability across systems and interdependence between systems (for example, Industry 4.0), industry sectors that previously hadn’t used formal systems engineering are now adopting a more formal approach. To achieve this, they are recruiting experienced engineers from sectors familiar with these principles (for example, transport is recruiting from defense) and asking these practitioners to create processes to integrate into their existing business practices. In reality, what they are doing is inheriting conditioned thinking. In the example of transport inheriting from defense, they are inheriting a legacy of over-prescribed MIL-STD process that is tolerated in the defense sector but is really not suitable for overlaying on the siloed, standards-driven transport sector.

Industry Norms – designed to standards

Rigid adherence to design standards is another form of conditioned thinking, and in the same way that compliance with process can overly burden a project and drastically increase the unproductive tension between SE and PM, so too does a culture insistent on complying with standards. These industry standards (or perceived industry norms) were generally created in reaction to safety incidents or serious accidents, or as a means of capturing and sustaining best practice and ‘rules of thumb’. They were first introduced solely as conditions of contract between the customer and supplier. In most industries or sectors they evolved to be treated as ‘the bible’ for a particular discipline on a specific design topic. The most prolific application can be seen in the construction, railway, and automotive industries, but they are also prominent within the healthcare and maritime sectors. As a result of these standards being ‘adopted’ as the ‘industry norm’ for any given application, they require ongoing maintenance and upkeep; this spawned technical societies and safety boards whose primary role became administering the content and currency of these standards. In some specific scenarios the industry regulators (safety or operations) inherited this responsibility, and those standards attracted a further stigma of requiring compliance in order to achieve accreditation to use or operate the system, plant, facility, or full capability. Each country recognized a need to ‘police’ this activity, since most organizations (especially Government organizations) sought to take advantage of existing standards; thus associations (like Standards Australia) were established and then affiliated with other global groups under defined charters.

It is important to recognize the efficiency gained through common reference to an ‘accepted’ standard; however, it is far more important to recognize that those standards were developed to meet a specific need under specific conditions, and they should only be used as a source of applicable requirements to ‘adopt’ on a Program for any of the lifecycle phases of design, build, operate, and dispose. The all-too-common occurrence on any project is to take an FPS statement such as ‘the system shall be compliant to the electrical wiring standard AS3000’, copy it directly into a system specification, and duplicate it into all of the product specifications. This immediately triggers ‘conditioned thinking’ in all affected practitioners. For example, the product supplier confirms ‘our products are AS3000 compliant’, the electrical designer completes a set of electrical drawings using their AS3000 training, and the installer ensures they wire and protect all equipment using AS3000 principles. Then, during commissioning, the testers report numerous earth faults and, worse still, a commissioning technician receives an unhealthy dose of electrical current when leaning against a perfectly AS3000-earthed electrical cabinet. Everyone missed that the ‘ship’ was configured as an ‘insulated neutral’ distribution network, because they were too busy procuring, designing, and installing in accordance with the standard.

As a design authority, I find it quite concerning how many conversations I have had with design practitioners who state, quite passionately, “It is safe because it is compliant with Standard X”; yet in some industry sectors this is the ‘Norm’, and it is the design authorities who are making that statement to regulators. It is therefore easy to understand why regulators are moving (quite quickly) to require ‘Assurance’ from designers and integrators, because these organizations were all too eager to say ‘we designed/built/installed to the “standard” requested by the regulator’. The problem with requesting ‘Assurance’ is that it has a multiplying effect on the ‘unproductive tension’ within the delivery team. The PMs have not altered their work decomposition approach (except perhaps to introduce an additional work package called ‘Assurance’), nor have they sought to understand what additional ‘non-scoped’ technical activities may be needed, and it would be quite rare to find a contract that clearly differentiates the type of deliverables needed as a result of this regulator side-step. Meanwhile, the technical teams (including systems engineers) still cite their ‘religious’ standards in sweeping (usually unsubstantiated) compliance statements, and now argue about what ‘Assurance’ actually means. And the organizations now ‘exposed’ to potentially increased liability, which can no longer take comfort in regulators ‘advising’ what to do (because regulators now act as auditors), apply yet another layer of governance by creating or appointing Assurance Managers and usually an entire Assurance team.

A recent example of the pitfalls associated with designing to standards in the new regulatory environment is the Boeing 737 MAX disasters. Due to legal sensitivities, it is not possible to elaborate on the specifics of this case study; however, the recent, publicly available technical review into the Boeing 737 MAX accidents (Joint Authorities Technical Review (JATR) – Boeing 737 MAX Flight Control System – Observations, Findings, and Recommendations) uncovers many of the cultural norm issues discussed in this paper.

Business Practices – Contracting Methods

Because the preceding sections suggest the need for continuous improvement of delivery programs, it would seem appropriate to identify a few specific areas where organizations have delivery challenges, and then offer suggested Corrective Actions (in accordance with ISO9001) for executives to ‘pass down’ to their lead teams, enabling them to roll out a Change Management program (ADKAR[5] is gaining popularity) and monitor progress. However, when the customers requiring the delivered outcome are themselves a significant contributor to the PM-SE integration challenge, it becomes apparent that modern-day business paradigms, which now sound like broken records, are unlikely to bridge the rift within most delivery organizations.

Contracting methods come in a vivid array of types and styles and can also vary significantly across industry sectors. They can range from Fixed Price with fixed and explicit Scope through to Time and Material with variable scope agreed at set gates. For a large number of public infrastructure delivery programs, the current preferred method is Public-Private Partnership (PPP), which in its own right has numerous constructs for actual delivery of the required outcome. One aspect is generally consistent regardless of the contracting method: the Need Statement or Statement of Requirement, which defines or describes the required capability or service to be delivered.

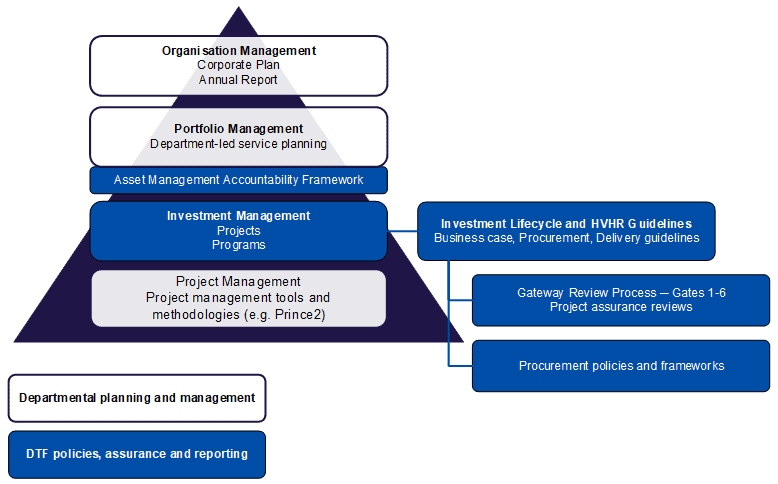

For most industry sectors, each customer has a preferred common approach based on how it manages the lifecycle of its assets or resources. The Australian Defence Force (ADF) uses a Capability Life Cycle (CLC) model, which is supported contractually by the Australian Defence Contracting (ASDEFCON) framework. For other Australian government programs, lifecycle management tends to occur at the state level, with Victoria using the Investment Life Cycle and High Value and High Risk Guidelines (refer to Figure 7), which define capabilities. Victoria has also established the Office of Projects Victoria, which is intended to provide independent advice and project monitoring to achieve successful project delivery. All of these lifecycle models depend upon project management, and the contracting mechanisms explicitly require formal PM to be undertaken.

Figure 7 – Victorian Government Investment Planning and Management Framework

As highlighted in previous sections, the dependence upon PM practice means that PM practitioners are engaged to develop and construct the contracts; due to their conditioning, they create Statements of Requirement that explicitly require ‘deliverables’ at key milestone gates and link contract progress payments to acceptance of these deliverables. Even in quite mature lifecycle models, such as the ADF’s, where Operational Concept Documents (OCD) define the capability outcomes in very clear terms, the contracts, including payments and penalties, are inextricably formed around deliverables at Mandatory System Reviews (MSR). There is reference to achieving the intended outcome, and sometimes direct reference to the OCD, but without fail the Contract is linked to delivering documentation and procuring material, with penalties applicable if the MSR milestones are delayed. Thus, the dutiful PMs engaged to manage the contract follow their training and focus on getting deliverables out the door and exiting MSRs with the minimum of conflict. After all, they are nominated within the company organization structure as the ‘accountable’ person, and they are directly pressured when the cash flow is not to plan or a large milestone payment slips outside an annual planning cycle. Inevitably, the schedule is reported as on track right up until the point where the delays can no longer be ignored or hidden and the capability simply can’t be fielded as planned.

Organization Structure

The structure of an organization has a direct effect on how projects are delivered, and most organizations vary their structure quite often (for example, every few years) as the nature of the work being conducted changes. For example, a shipbuilding organization that engages in large defense contracts may swing to a heavily project-based structure as programs are won and ramped up, and then back to functional constructs as the large program completes and resources need to be ‘shared’ across smaller programs. Alternatively, a similarly sized shipbuilding company that concentrates on the commercial market and consistently produces a multitude of ship variants may use a functional construct that shifts to a Matrix style, varying from strong to weak Matrix, as contracts are won and completed.

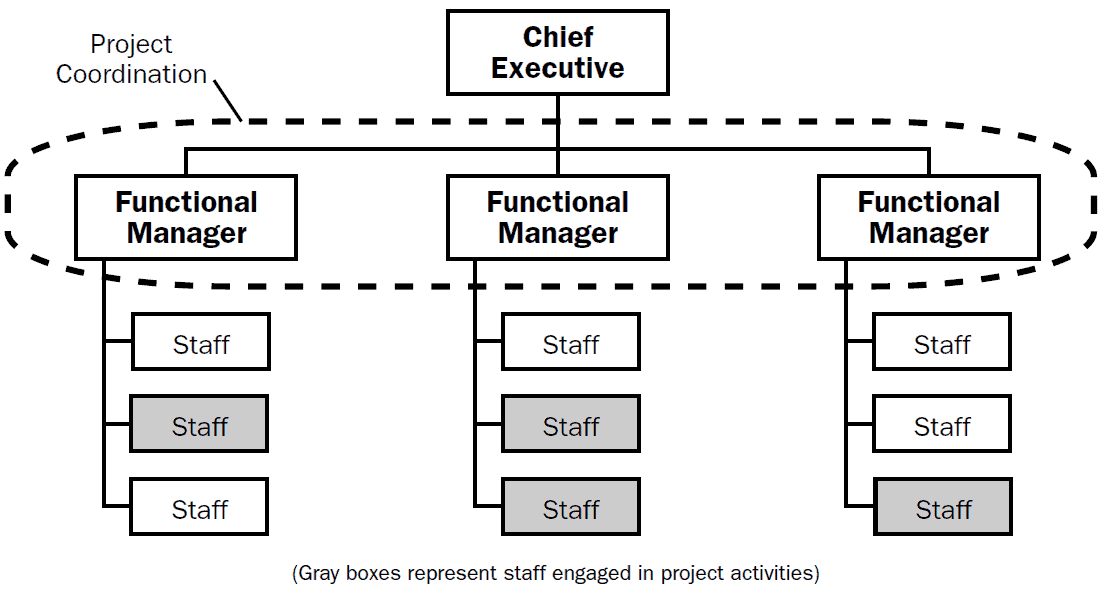

The following models show the generic concept of each organizational hierarchy, with project delivery indicated by the dotted lines. For each, a brief description of how it is used is provided, together with a high-level description of how conditioned thinking can surface within that environment and how it affects the unproductive tension between PMs and technical leads (particularly systems engineers).

Figure 8 – Functional Organization Hierarchy

The functional structure is prevalent within companies that have a large number of programs of work, usually less complex, where that work effort contributes either to the Company’s overall objective or to the cumulative completion of work output. Each Functional Manager is aware of all programs occurring within the company and distributes and balances their allocated resources across each project within those programs. These managers are also responsible for understanding the level of expertise that must be maintained in order to deliver any upcoming planned works.

In these structures, PMs can actually be one or more of the functional leads who are allocated specific projects to manage and deliver. Generally, if a dedicated PM is engaged, the model transitions to more of a Matrix style (see below), where the PM engages each functional lead to secure access to specific resources. In purely functional hierarchies, the vertical divisions (functions) can readily succumb to a silo mentality and try to apply a ‘one-size-fits-all’ procedural approach, which can be effective for service delivery but rarely for project delivery. The unproductive tension is not just between the PM and the technical lead, but also between silos, and conditioned thinking can lead to entire functional groups being bypassed or even to their related scope being outsourced to contractors.

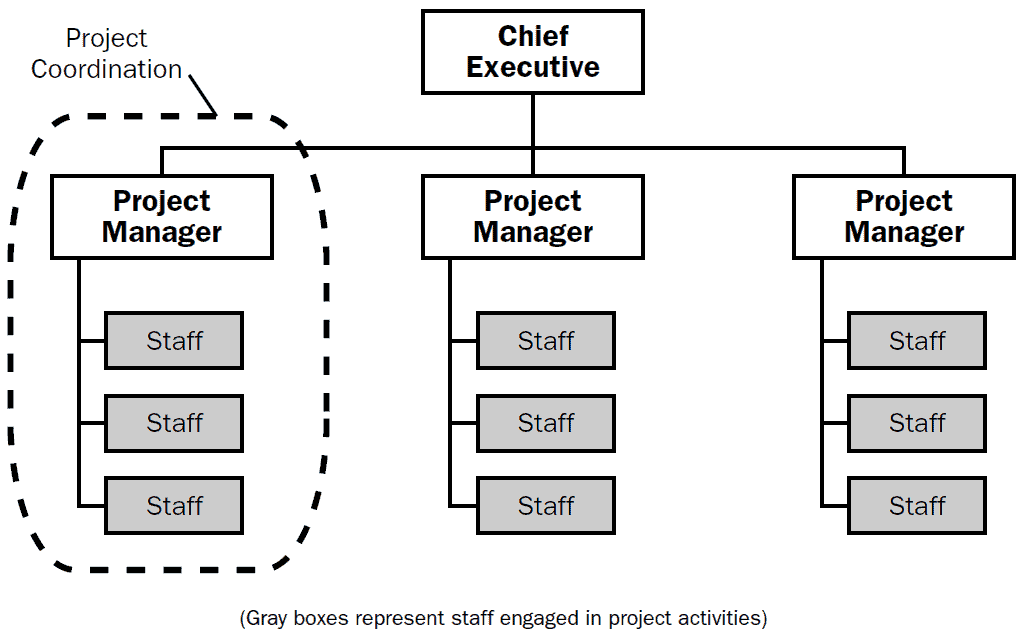

Figure 9 – Project Organization Structure

Within a project-based hierarchy, a series of relatively independent project teams (which can take the form of IPTs) are established, and the program portfolio is distributed among these teams. In larger, purely project-delivery organizations, this structure ends up as a separate team per company contract. This is usually seen as an efficient way to organize for project delivery, since the teams can be trained specifically for the contract requirements and processes can be tailored to the defined scope. Unfortunately, what tends to occur is that teams roll from one contract onto the next, replicate all of the processes from the previous contract, and adjust (rather than tailor) them. The PMs are given complete autonomy to hire and fire staff and will regularly ramp up new resources to meet their emergent needs, without regard for the resource needs of future projects.

In a project-based hierarchy, the conditioned thinking comes with the resources engaged, either from prior contracts or from prior organizations. A very common result is ‘reinventing the wheel’, where the project teams are so disconnected from the broader organization that they develop their project plans in complete isolation, usually from the first principles of their respective domains. The unproductive tension in these structures results from independent planning within the teams themselves: the PMs develop their own plans and delegate to discipline leads, who in turn develop their own plans. In these companies, it is quite common to see multiple processes running in parallel that are trying to achieve the same thing (for example, PM lifecycle, SE lifecycle, logistics/support, production, and asset management).

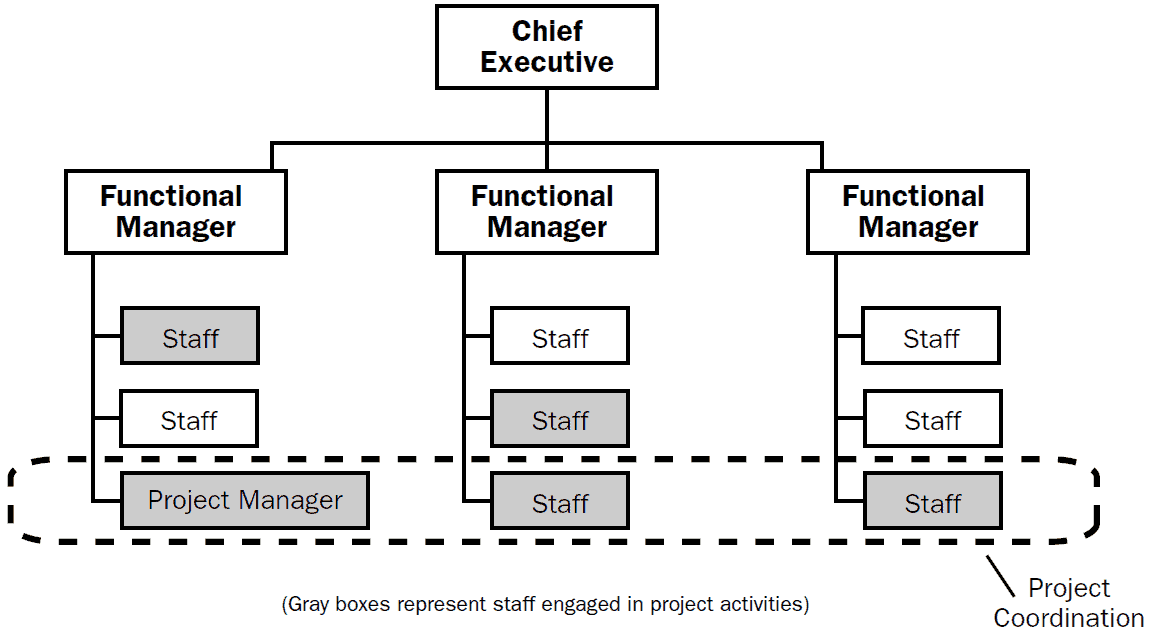

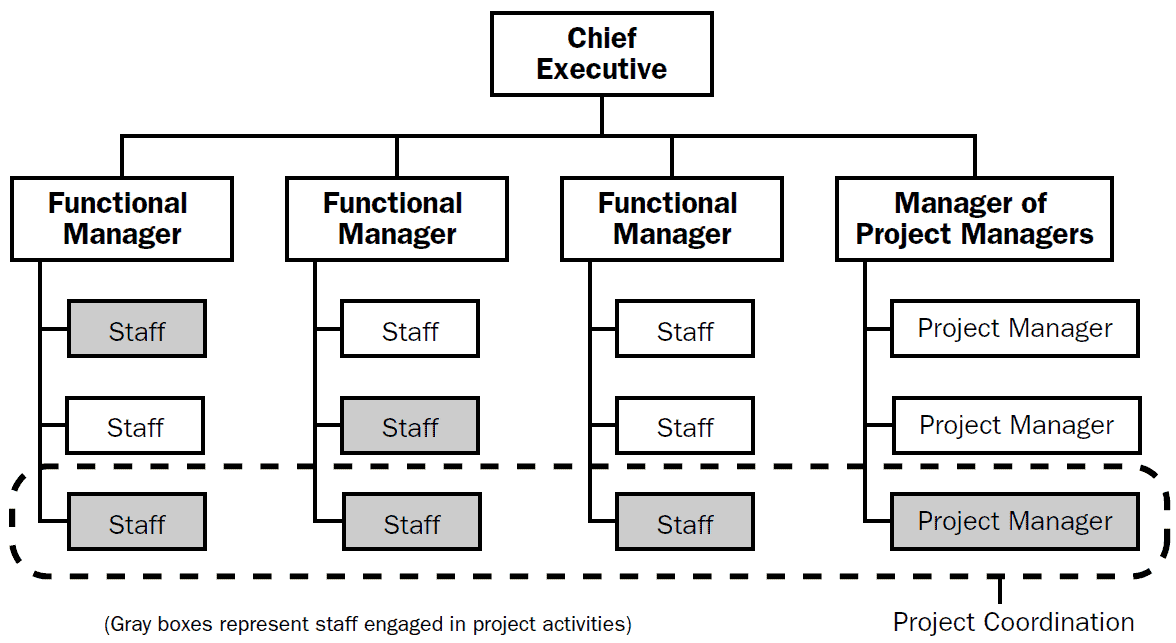

Figure 10 – Matrix Organization Structure

This Matrix model is effectively a hybrid of the two previous models, and the term “Matrix” results from the ‘vertical’ and ‘horizontal’ teams that exist at any given time within the organization. Generally, PMs are ‘assigned’ from within a vertical function and are tasked to ‘build’ a team from resources across all of the functions; resources are generally allocated for 100% of their time for the period required. With this approach, once resources are no longer needed on a specific project, they return to their functional ‘home’ to resume function-based tasking or to be reallocated to another project. These structures are referred to as ‘weak’ or ‘strong’ matrix depending on whether they are more functional or more project-based, respectively. As an organization trends toward a strong matrix hierarchy, it can actually create further vertical ‘cells’ that are purely project-focused (see Figure 11). This is in essence a Project Management “Function”, and although it runs as a more project-based structure, it can suffer the same siloed mentality observed in fully function-based hierarchies.

Figure 11 – ‘Strong’ Matrix Organization Structure

In a Matrix structure, the conditioned thinking of any of the previous models can occur at any time, and it can combine ingrained functional processes within silos with the individual leads’ approaches from prior contracts or prior companies. The unproductive tension is equally variable, and can arise from something as simple as pairing a technical lead and a PM who disagree with each other’s approach and carry ‘history’ from previous projects.

The three organizational structures highlighted above can be allocated as follows to the specific cases noted in this paper:

- Generic Rolling Stock structure (Figure 3) – Functional hierarchy

- AWD build program (Figure 4) – Matrix (favoring IPTs)

- Boeing 737 MAX re-engined upgrade (Figure 7) – Strong Matrix

Thus, the type of organizational hierarchy does not provide an immediate solution to managing conditioned thinking or the resulting unproductive tension, and every structure has its pros and cons for both efficient and effective delivery of projects. The challenge actually lies in the allocation of “accountability”, and this, in itself, is another manifestation of conditioned thinking. CEOs and equivalent executive decision makers in modern organizations have at some point undergone “leadership” training, and a large number will have completed MBAs. This training, like that of a PM or an Engineering Manager, is established against ‘recognized’ standards and tends to focus on the financial management of the organization, with significant emphasis on business growth, particularly in terms of returned profit and year-on-year sales increases. Unfortunately, this tends to ‘flow down’ through the organization in the form of personnel goals and objectives and is ultimately placed on the PM allocated to deliver the assigned projects. When looking at any organization, it can be quite difficult to determine who holds accountability beyond the Program Manager (or the Functional Manager in a functional structure). Moreover, separating the executive authority from the technical authority is quite often impossible. A large number of project delivery organizations appoint Chief Engineers who are believed to hold the technical authority for any assigned projects or products; however, when one looks at the role responsibilities, the position is an auditing role, confirming that all business processes were followed and that, based on the data available, the system meets its form, fit, and function requirements and is safe to operate. In reality, all of the decisions are being made by the ‘accountable’ PM to ensure that the project achieves its milestones and releases the related payments.

Recently, Elon Musk was interviewed at the Wall Street Journal (WSJ) CEO Summit, where he highlighted that CEOs are not focused on the right things to achieve the growth to which they aspire. He noted that “A company has no value in and of itself. It only has value to the degree that it is an effective allocator of resources to create goods and services that are of a greater value than the cost of the inputs.” Relative to the concepts described in this paper, he is indicating that organization structure is important because it is the way an organization allocates its resources to create its products.

When looking at the 737 MAX program structure within Boeing, the issues encountered all point back to how Boeing had restructured into a Strong Matrix hierarchy. The members of Boeing’s executive board were focused on returns to shareholders and restructured to place accountability for project delivery with the program leads. The decision to proceed with the 737 MAX was made against technical guidance, but it was forecast to cost only 10% of designing a new product. Technical decisions were overtaken or even overridden by the program leads, and the technical heads of the various functional areas had no direct control over the technical resources. The organization promoted leadership attributes and retained its executives even though most programs were failing to deliver, some were considerably behind schedule, and products were being delivered with poor quality. “Accountability” was thus so dispersed that it was seen, and internally advertised, as ‘shared accountability’, potentially to create a buffer between the transactional and executive levels of the organization.

Finally, once the organizational hierarchy is established and roles are assigned, the next challenge is role responsibility. Each role will have some form of Position Description (PD) associated with it that permits recruitment but also serves as a means for individuals to understand their authority and responsibility within the project or even the organization. These tend to be quite generic and rarely get tailored to a particular role within an allocated project, especially in functional constructs, where they are more definitions of experience level. In most industry sectors, customers request a clearer definition of project roles within the operating plans, and these regularly conflict with an individual’s PD, leading to further unproductive tension. Current initiatives to improve the integration of program management and systems engineering are attempting to differentiate responsibility between roles within a program more clearly. The work within INCOSE UK on creating guides for the application of systems engineering has developed a specific view of how program management and systems engineering can ‘work together’ during each phase of the delivery lifecycle (refer to Guide Z11 Issue 1.1, Jan 2018). This is a great step toward reducing some of the unproductive tension between these practitioners without disrupting their current conditioned thinking, but it doesn’t actually promote the ‘true integration’ of the associated processes, and this will always be complicated by the organization’s role definitions and expectations.

Overcoming ‘Unproductive Tension’

This paper has explored the issues, identified in my 2018 paper, that make it so difficult to integrate project management and systems engineering within programs. The singular recurring theme is ‘conditioned thinking’, which generally leads to ‘constrained thinking’ and inevitably to ‘unproductive tension’ between the delivery agents of an organization. The content provided is intended to help the reader identify where this ‘conditioning’ might surface and to highlight why it so often causes projects to ‘derail’ and fail to achieve their original objectives. The remaining question is ‘what can be done to reduce or eliminate the resulting unproductive tension?’

Unfortunately, there is no easy answer to this, as we are all human and we work within the constraints that this brings. As humans, we become passionately attached, even committed, to our learned beliefs, and our first reaction is always to reject whatever does not fit with what we believe to be “real”. So when a delivery organization consists of several different disciplines, each with a different perspective of what is ‘real’ but all driven to ‘comply’ with their own related standards, it is no wonder that activities become disconnected and tension begins to rise.

Each project within the organization “must” adhere to each of these business processes, and so you have a set of Project Management processes, a set of Systems Engineering processes, and a set of Asset Management processes all running in parallel (but not quite in sync), with Quality Management, Finance, Supply Chain, and a raft of other business ‘functions’ layering their processes on top and demanding that their hurdles receive the same amount of attention or they won’t ‘service’ your requests. And then, somewhere amongst that quagmire of evolved process, someone says “the product is non-compliant” and the customer says “we will not accept it until compliance can be ‘assured’”.

And so you stop, scratch your head, and wonder ‘what were we meant to be building?’ One understandably feels pressured to believe that it doesn’t matter, so long as we comply with the processes and provide the required deliverables at the payment milestones. It should not be a surprise, then, that when one gets to testing the final integrated system, numerous interdependencies have been overlooked and you ‘experience some delays as issues are identified during testing’.

One area of project delivery where these issues can be overcome is Plan development. Rather than the usual approach of each discipline lead heading off into a silo to develop their ‘own plans’ in compliance with their ‘mandated processes’, you could suggest a workshop where the Capability is decomposed into all of its constituent parts and each responsible manager discusses how those parts will be achieved. Then write the Plans ‘together’, with a common, unified set of processes, and restructure your organization (or at least your Project) so that the higher-risk areas have individuals assigned with clear accountability for delivery and direct communication with the interdependent aspects of the program.

Further to developing Plans that can actually be used to manage the project’s objectives, the following are some topics that can be addressed to assist with overcoming our conditioning:

- Accountability requires the ‘authority’ to make decisions and full control of allocated budgets, including all risk contingency related to the scope. It also needs direct engagement with any stakeholders (internal or external) who have influence on, or dependence on, the task inputs and/or outputs. This includes all resources allocated to the associated Work Packages. The concept of ‘shared accountability’ has evolved relatively recently from a combination of organization structures and ‘leadership’ training, and it should be avoided because the result is a lack of accountability. ‘Delegation of accountability’ is another misnomer; it confuses accountability with the delegation of responsibility (or delegation of Authority).

- Schedules should reflect the content of your Plans and be based on the ‘actual’ work to be done. Schedules based on Full-Time-Equivalent (FTE) ‘by-the-name’ resourcing are absolutely useless. This includes all ‘overhead’ roles within a project, especially the Project Management Office; if they are scheduled as FTE, then they are wasting money (you just don’t know how much). ‘By-the-name’ resourcing should not be practiced because it results in FTE-based schedules.

- A common, unified set of processes for any tasks identified as necessary to yield the final capability is essential. Avoid having discrete discipline-based processes; if any process exists that is not applicable to all team members, then it likely is not required or, at least, it should be reviewed for relevance in delivering the actual outcome. An example is PM meetings and systems engineering reviews – these are in reality project maturity gates, where all active and upcoming tasks are assessed for completeness and residual risk (technical and programmatic). Also, there should be no separate functional ‘support’ processes; rather, these need to be integrated into the project processes (including recruitment and staff management, finance, supply chain, procurement, quality control, etc.).

- Finally, with regard to the significant concepts raised in this paper, the project role structure needs to align with the work to be completed. This is one of the most difficult challenges to overcome; however, taking steps toward a more effective delivery structure should help to reveal some of those long-held beliefs and allow bolder changes on future projects. When workshopping the Capability decomposition, avoid thinking in terms of the disciplines required; rather, apply an approach similar to the Product Breakdown Structure (PBS), Work Breakdown Structure (WBS), and Organizational Breakdown Structure (OBS) sequence described in most delivery-focused standards. Every Product or Capability requires a sequential buildup of elements into sub-systems, which are then integrated to form functional systems and finally a Capability. The majority of Capabilities can be planned as iterative releases until the final Capability is achieved. At every stage of this development, integration, implementation, and release ‘cycle’, the organizational structure should be ‘designed’ to complete the work. For example, consider having a test team that includes members of the requirements team and vice versa, so that the requirements developed are testable and their interpretation is available within the test team. Consider extending that thinking to the high-risk areas of the project by creating a structure where each logical set of work packages has appropriate resource types (and expertise) assigned, and the resulting Cost Account (CA) is allocated to an ‘accountable’ lead. If the dominant risk is technical, then that lead should be technical; if it is programmatic, then a PM; and so on.
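As a minimal sketch of the Cost Account allocation rule described in the last point above, and not a method prescribed in this paper, the following Python models hypothetical work packages (each traced to a PBS element), groups them into Cost Accounts, and names the type of accountable lead from whichever risk dominates. The numeric risk scores, the summing of those scores, the tie-break in favor of a technical lead, and all class and function names are illustrative assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskType(Enum):
    TECHNICAL = "technical"
    PROGRAMMATIC = "programmatic"


@dataclass
class WorkPackage:
    name: str
    pbs_element: str          # product (PBS element) this work package delivers
    technical_risk: float     # hypothetical score, 0.0 (low) to 1.0 (high)
    programmatic_risk: float  # hypothetical score, 0.0 (low) to 1.0 (high)


@dataclass
class CostAccount:
    name: str
    work_packages: list[WorkPackage] = field(default_factory=list)

    def dominant_risk(self) -> RiskType:
        # Assumption: aggregate risk is the simple sum of work-package scores;
        # ties are resolved in favor of technical risk.
        technical = sum(wp.technical_risk for wp in self.work_packages)
        programmatic = sum(wp.programmatic_risk for wp in self.work_packages)
        if technical >= programmatic:
            return RiskType.TECHNICAL
        return RiskType.PROGRAMMATIC

    def accountable_lead(self) -> str:
        # One accountable lead per Cost Account, matched to the dominant risk.
        if self.dominant_risk() is RiskType.TECHNICAL:
            return "technical lead"
        return "project manager"


def allocate_leads(accounts: list[CostAccount]) -> dict[str, str]:
    """Map each Cost Account name to the type of lead that should be accountable."""
    return {ca.name: ca.accountable_lead() for ca in accounts}


if __name__ == "__main__":
    accounts = [
        CostAccount("Propulsion integration", [
            WorkPackage("Design power interface", "Propulsion subsystem", 0.8, 0.3),
            WorkPackage("Integrate and test drives", "Propulsion subsystem", 0.7, 0.4),
        ]),
        CostAccount("Long-lead procurement", [
            WorkPackage("Procure main engines", "Propulsion subsystem", 0.2, 0.9),
        ]),
    ]
    for name, lead in allocate_leads(accounts).items():
        print(f"{name}: {lead}")
```

Run as a script, this prints one line per Cost Account: the integration account is assigned a technical lead and the procurement account a project manager. A real program would derive its risk picture from the risk register rather than from hand-entered scores.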

It’s my hope that you are now thinking more broadly about the application of skills and knowledge to any given work package, and that you see ‘true integration’ as the collaborative engagement of all disciplines to achieve the desired outcome. Referring back to the approach adopted by INCOSE UK in the Z-Guides, it should be clearer that this is a “reaction” to decades of conditioned thinking, whereby the disciplines “carve out” their piece of the project’s work package. This may allow some division of role responsibility, but it will never eliminate the ‘unproductive tension’, because, for example, the PM will not ‘understand’ the Configuration Management needs of a program, seeing them as an SE responsibility. Likewise, the SE would generally say the PM hasn’t provided a suitable schedule to achieve the technical output. A more integrated approach would have every discipline contributing to Risk Management, Configuration Management, make/buy decisions, schedule management, etc., with the “accountable lead” for the Cost Account responsible for authorizing the approach taken and de-conflicting the multiple perspectives.

Conclusions and Recommendations

For the six specific aspects noted in the introduction to this article, the following is a summary view of what needs to occur in order to effect true integration of program management and systems engineering.

- Human Nature – we are all human, so accept it and approach every project or delivery program with the understanding that all involved (including Customers and Regulators) have been ‘conditioned’, are not ‘thinking clearly’, and will resort to learned beliefs and cultural norms. Subtlety is critical, as ‘people’ will instantly reject any suggestion that deviates from the norm. 2021 delivers one of the ‘key levers’ to effect change within the currently PM-centric delivery program model: the release of the new PMBOK 7th Edition. This new release has been directly influenced by the PMI-INCOSE-MIT collaborative research work and marks a significant departure from a descriptive, process- and deliverables-focused approach; it is principles-based and outcome-focused. Steps need to be taken to help the PM community understand the critical importance of strengthening PM and SE integration.

- Communication – During contract planning and throughout delivery, avoid discipline-specific language and use generic language related to the actual status of your contract and program. It is not a ‘CDR’ or Critical Design Review; it is a Project Maturity Gate (PMG), and its contractual purpose may differ from one project to the next. The intent of this PMG is to assess whether the design is mature enough to proceed to the next stage. Providing all contract deliverables is not sufficient; we need to set expectations based upon the actual status of the project or program.

- Culture & Environment – The purpose of having standards and process is to facilitate the efficiency and effectiveness of project or program delivery. The standards and processes need to be assessed for applicability and suitability. If a Customer implies that compliance with any particular standard or process is necessary, the validity of that requirement should be verified for the specific project or program. It is quite likely that some aspects of any standard or process are not applicable or appropriate for a particular project or program. Safety and Integrity can only be ‘assured’ when the foundations are ‘verified’. Designing to a Standard does not in itself ‘assure’ anything. If your program is independently monitored by Regulators, then it is even more important to confirm the applicability of standards and processes.

- Societal – standardization is a current societal ‘habit’, and for the most part it does make life easier for subsequent activities or endeavors; however, more often than not, it builds in complexity. These days, contracts are ‘template-based’ and the referenced standards are part of an ‘industry library of standards’. So now, more than ever, it is critical to understand the ultimate objective and to develop your plans and procedures to deliver that objective. Many industries are realizing this dilemma and revisiting their contracting models in an effort to adopt more collaborative contracting arrangements, but those involved need to overcome their own internal conditioning. If one focuses on Capability and outcomes, then the red herrings in contracts can be identified and resolved more effectively.

- Organizational – companies, especially large ones, need to adopt a hierarchical construct of some form for effective decision making to occur and for those decisions to filter through to all employees. Do not fall foul of the biases that those constructs embody. It is particularly important to see beyond the management rhetoric that has emerged with current business practices. Business psychology has become an industry unto itself, with leadership programs and employee engagement surveys proliferating across most organizations. It is important to realize that these activities are intended to provide a level of engagement that promotes employee accountability within organizational structures. If your organization or project has a traditional line-managed hierarchy, then any accountability is likely ‘shared accountability’, which in reality means that ‘no one is actually accountable’. We must provide project structures that are directly aligned to what needs to be delivered.

Recall that the content from Chapter 16 of Integrating Program Management and Systems Engineering (IPMSE) provided calls to action for several groups of stakeholders: academia, enterprise, policymakers, industry and professional societies, and researchers. These calls to action identify specific actions that stakeholders in each group should take. We are now seeing some positive outcomes from PMI with the impending release of the 7th edition of the PMBOK, but this has yet to be mirrored within the INCOSE publications. Also, there are many positive signs from Academia (particularly within Australia), but this has not yet materialized in any practical change.

The other groups that were called out in the previous paper from Issue 72 of PPI SyEN were CEOs and the general discipline practitioners. For CEOs, we are seeing industry disrupters like Elon Musk highlighting the modern trend of prioritizing financials over product; hopefully this resonates with many business leaders. The critical step that these change agents should take is to understand that their employees are operating largely on auto-pilot, with similar training conditioning their thinking and actions. Fostering a culture where the outcome and realization of desired Capability is more important than forcing deliverables at milestones or exiting review gates to achieve an early payment milestone would be a tremendous step forward.

Finally, for practitioners, we must discipline ourselves not to blindly follow a process and not to assume that compliance and assurance are achieved through adherence to standards and processes. Understandably, this requires improved practice of both systems engineering and program management. We must understand the work that is needed to achieve the desired outcome, and develop or tailor standards and processes specifically to deliver that work. Recognize that other practitioners have also been conditioned to their own learned beliefs and that being confrontational to promote your personal conditioning will only add to the unproductive tension. Take the time and effort to adjust your language to describe why an event is occurring within the context of the desired outcome.

Obviously the change required to overcome our conditioning and to fully integrate systems engineering and program management will be difficult. As I have described, the needed change will require program managers and chief systems engineers leading complex programs to work differently. Our responsibility to everyone involved demands that we commit ourselves to the continuous improvement of our approach. I trust that the observations and suggestions provided in this paper will facilitate the needed journey.

References

Griffin, Martin. “Integrating Program Management and Systems Engineering”, Project Performance International Systems Engineering Newsletter (PPI SyEN) Issue 72, December 2018. Available here.

Hiatt, James. ADKAR: A Model for Change in Business, Government, and Our Community. Prosci Learning Center Publications, 2006.

INCOSE UK Z-Guides available here.

Joint Authorities Technical Review (JATR). Boeing 737 MAX Flight Control System – Observations, Findings, and Recommendations, October 11, 2019.

Rebentisch, Eric. Integrating Program Management and Systems Engineering. Hoboken, New Jersey USA: John Wiley & Sons, Inc., 2017. Available here.

Roseke, Bernie. “The 4 Types of Project Organizational Structure”, August 16, 2019. Available here.

Steward, David (Parsons Brinckerhoff). INCOSE UK Rail Interest Group, January 21, 2009.

The Auditor General, Audit Report No.22 2013–14, Air Warfare Destroyer Program, 6th March 2014.

The Wall Street Journal. CEO Summit interview with Elon Musk, December 8, 2020. Available here.

Young, Ralph R. Article series addressing program managers and chief systems engineers integration. Victoria, Australia: Project Performance International Systems Engineering Newsletter (PPI SyEN) Issues 56 – 73, August 2017 through January 2019. Available here.

About the Author

Martin Griffin has over 25 years’ experience in engineering design and management, with 10 years specifically within the Maritime Defense sector, and is currently involved in agile software development. He has eight years’ experience as an Executive Engineering Manager and is a discipline specialist in Systems Integration Engineering, Maritime and Mechanical Engineering, RAM Analysis, Safety Cases, and Risk and Issue Management. Martin is experienced in the successful delivery of complex engineering systems and services within Technical Regulatory Frameworks in the defense sector.

Martin has worked in project management capacities as well as engineering management and has led organizational change initiatives to maximize the effectiveness of delivery. Through the 2009 Defense Strategic Reform Program, he molded a functionally based engineering group into a LEAN project delivery team operating across individually tailored Systems Engineering upgrades, to halve the cost base of the organization. More recently, he led the restructuring of engineering disciplines into multi-domain delivery cells that are able to operate across multiple support contracts utilizing unique system engineering process models.

1 See www.sesa.org.au

2 Martin Griffin, “Integrating Program Management and Systems Engineering”, Project Performance International Systems Engineering Newsletter (PPI SyEN) Issue 72, December 2018. Available here.

3 PRINCE2, or PRojects In Controlled Environments, is a popular project management methodology used in over 150 countries. It’s a process-based approach that focuses on organization and control throughout the entire project, from start to finish (Wikipedia).

4 The PMI-INCOSE-MIT collaboration was one of several actions taken in response to the research finding that unproductive tension exists between PMs and SEs. See Rebentisch [ ], p. xlviii for a discussion of the origins of this collaboration.

5 See “What is the ADKAR Model” for a description of this goal-oriented change management model that guides individual and organizational change – available here.

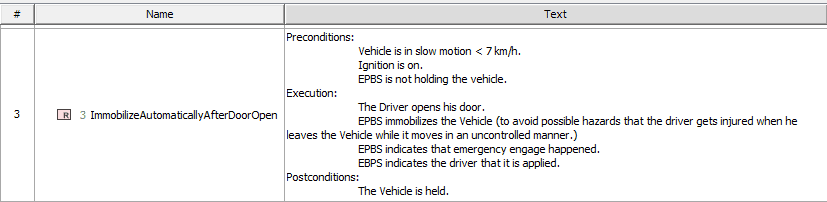

2.2 A Practical Example of the Process to Define a System Functional Architecture

by

Alfonso Garcia and Jose L. Fernandez

Emails: alfgarciacasado@gmail.com, joselfernandez@telefonica.net

Independent Consultants

January 9, 2021

Abstract

One of the most important activities that systems engineers must perform is to understand what the system must do to deliver the expected value to its stakeholders. Doing so by creating the system functional architecture has numerous benefits that facilitate the subsequent phases of system development. In this paper, we demonstrate how to apply the ISE&PPOOA MBSE methodology to create a consistent functional architecture of an Electric Park Brake System using Cameo Systems Modeler™ with its SysML profile, taking advantage of the simulation capabilities of the tool.

Web site: http://www.omgwiki.org/MBSE/doku.php?id=mbse:ppooa

Copyright © 2021 by A. Garcia and J.L. Fernandez. All rights reserved.

Introduction

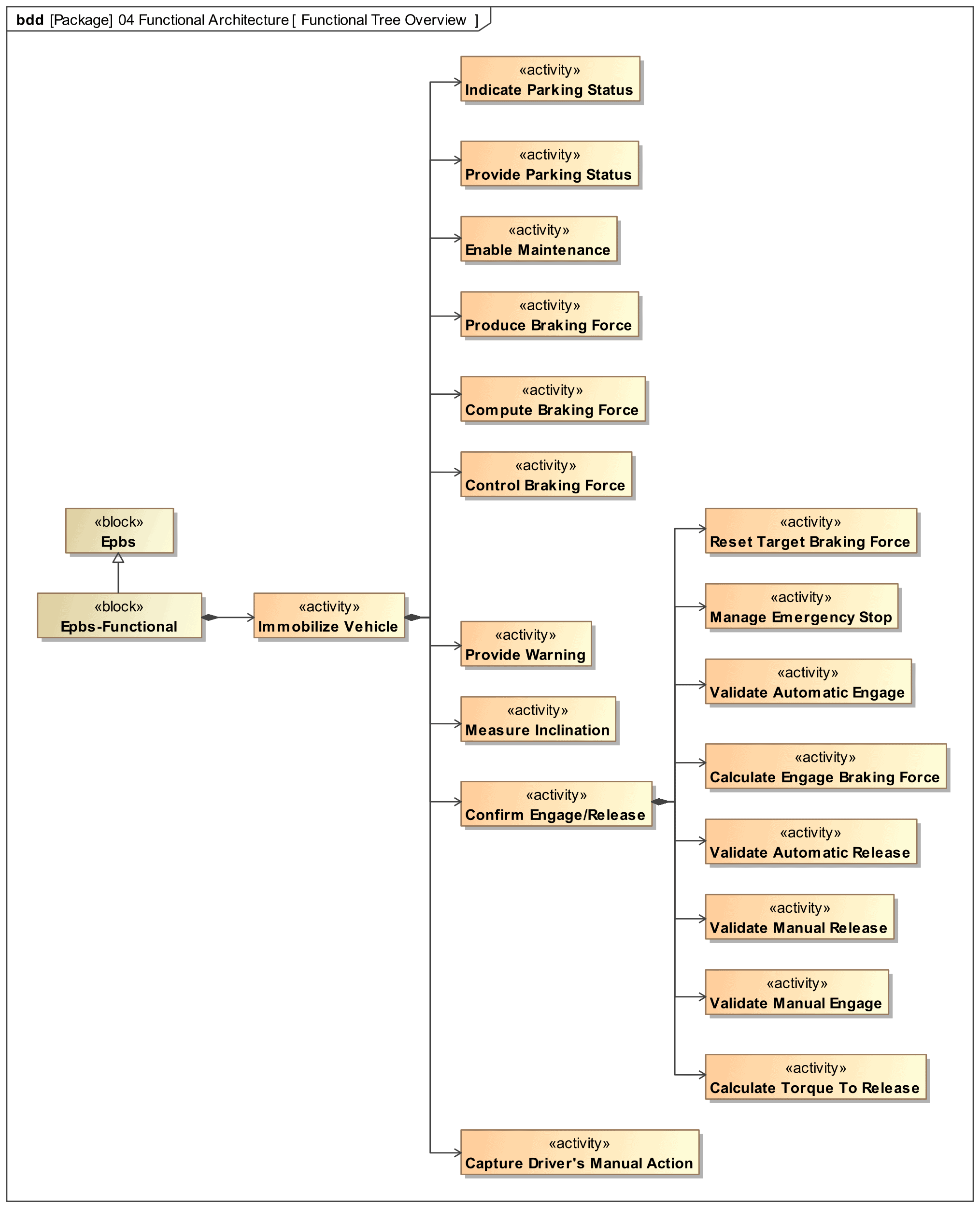

The functional architecture of a system represents the decomposition of its functions and the interactions between these functions representing flows of behavior. It is the result of the functional analysis and it is at the core of the system architecture modelling activities [1], as the functional architecture represents the main definition of the problem, i.e. “what the system does that delivers value”.

One of the main advantages of defining the functional architecture is that, at the highest levels of its hierarchy, it is independent of the technical solution. This helps us avoid constraining the design solution space too early in development, and it also helps minimize the impact of changes during the design stage, when the physical solution evolves as technology is applied to the system.

The functional architecture is depicted using several diagrams, each supporting a different view of the functional architecture model. The main views are those describing the functional hierarchy, the functional interfaces, and the functional flows.

In this article we show how to apply the ISE&PPOOA MBSE methodology [2] to define the functional architecture of an Electric Park Brake System (EPBS) using the Cameo Systems Modeler tool out of the box, without adding any profile other than standard SysML.

Essentially, an EPBS has the same responsibility as a traditional mechanical handbrake: to hold the car firmly in place once it is parked. However, when the driver uses a mechanical handbrake, she must control the retaining force produced by the handbrake with only her senses and intuition to guide her. Adding electronic control, by contrast, provides numerous benefits to the driver: it improves her driving experience and comfort and increases safety.

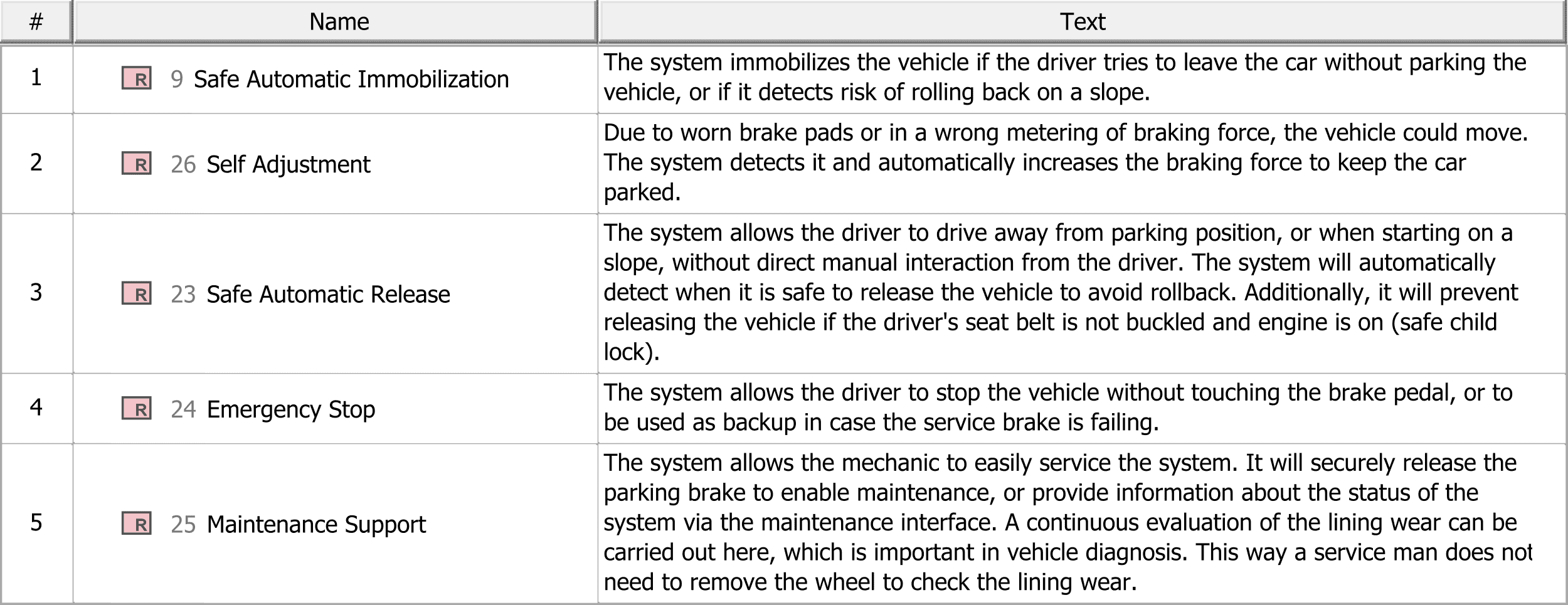

When the car is stationary and the EPBS is activated, the system calculates the retention force needed for the slope inclination, and it re-adjusts the produced force to keep the car in place as the temperature changes or as the brake pads wear over their lifecycle. Additionally, the system assists the driver when starting on a ramp, preventing the car from rolling back. If the driver leaves without parking the vehicle, the system detects this and immediately immobilizes the vehicle. Conversely, the EPBS cannot be released if the engine is on and the driver’s seatbelt is not buckled (i.e., a child safety lock). Finally, the system provides an emergency braking function that stops the vehicle while it is in motion, when commanded by the driver.
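To make two of these behaviors concrete, they can be sketched in Python. This is an illustration only, not part of the authors’ model. It assumes the holding force equals the downhill component of the car’s weight, F = m·g·sin(θ), scaled by a hypothetical safety factor of 1.2. It also encodes the release interlock exactly as the rule is worded above. The names `required_retention_force` and `release_allowed` are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def required_retention_force(mass_kg: float, slope_deg: float, safety_factor: float = 1.2) -> float:
    """Holding force in newtons for a parked car on a slope.

    Assumes the only load is the downhill component of the car's weight,
    m * g * sin(theta); safety_factor is a hypothetical margin, not a value
    taken from the article."""
    return safety_factor * mass_kg * G * math.sin(math.radians(abs(slope_deg)))


def release_allowed(engine_on: bool, seatbelt_buckled: bool) -> bool:
    """Literal reading of the interlock described in the text: the brake
    cannot be released while the engine is on and the seatbelt is unbuckled."""
    return not (engine_on and not seatbelt_buckled)


if __name__ == "__main__":
    # Hypothetical 1,500 kg car parked on a 10 degree slope.
    print(f"{required_retention_force(1500, 10):.0f} N")
    print(release_allowed(engine_on=True, seatbelt_buckled=False))
```

For the hypothetical 1,500 kg car on a 10 degree slope, this prints about 3066 N and then `False`. A real EPBS would also compensate for temperature and pad wear, which this sketch ignores.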

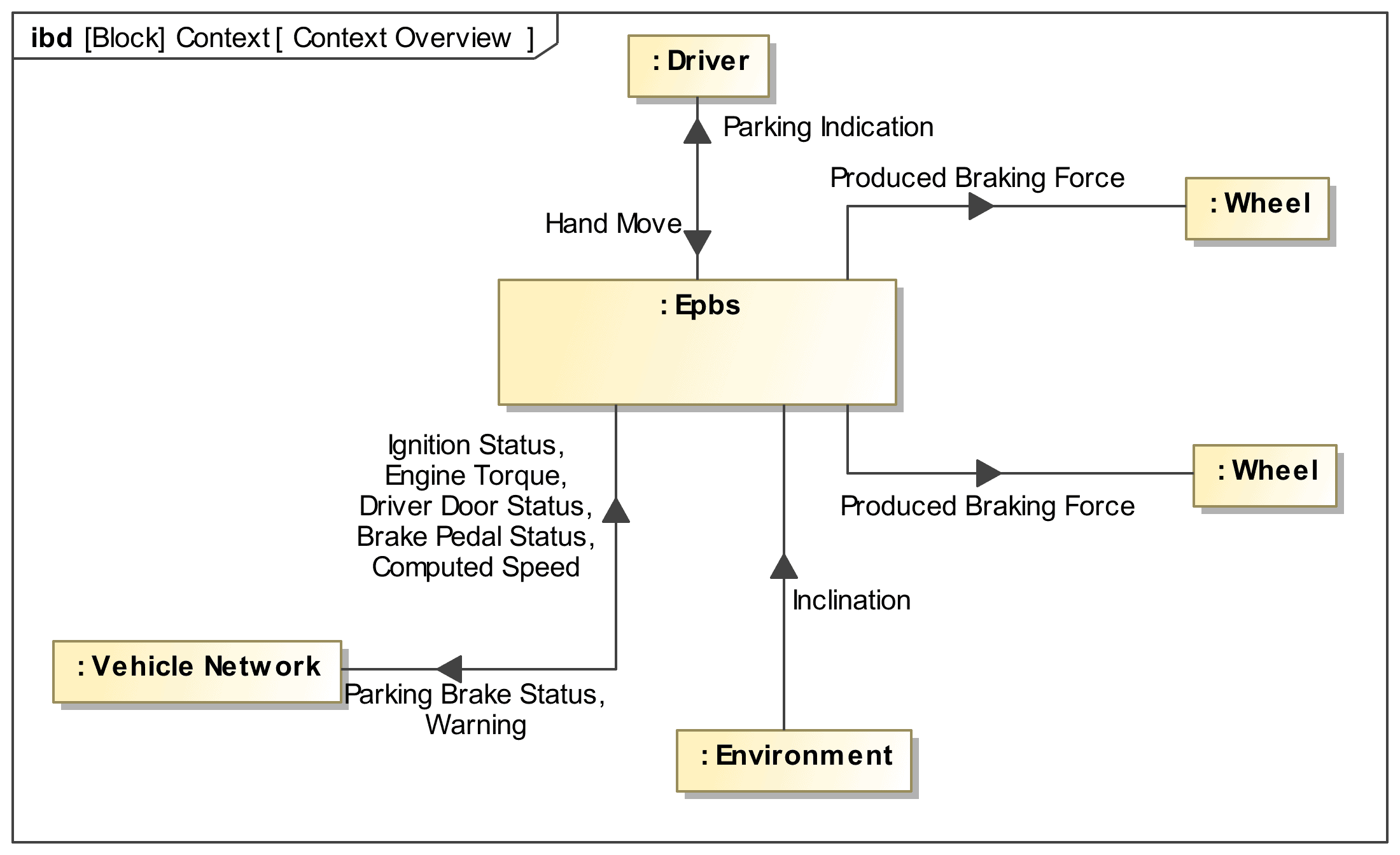

Figure 12. Context Diagram of the Electric Park Brake (EPB) system.

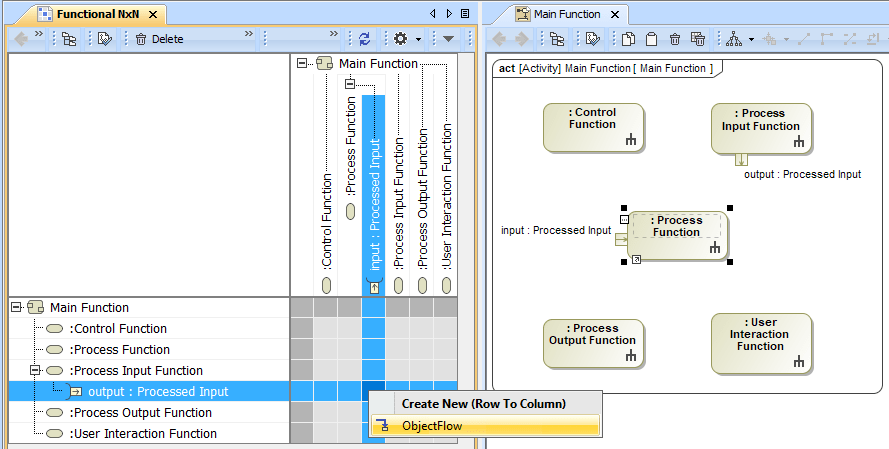

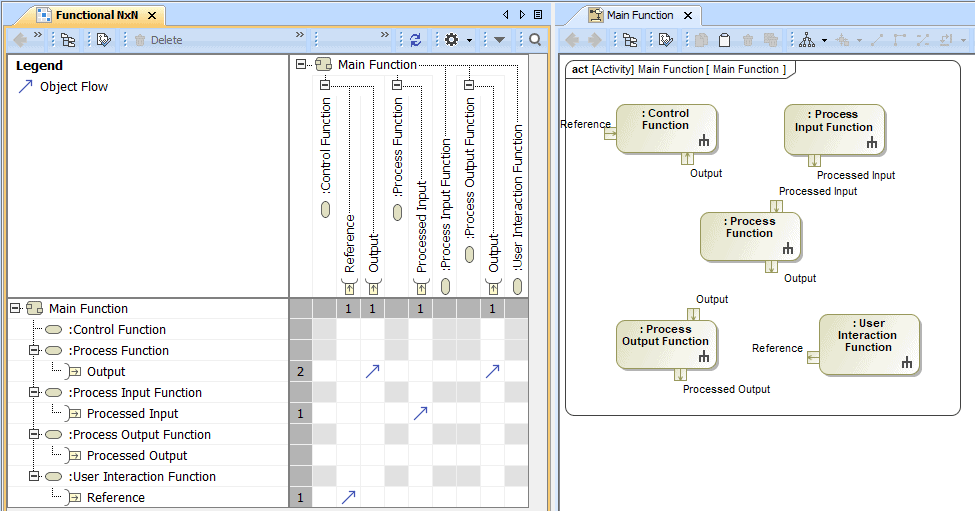

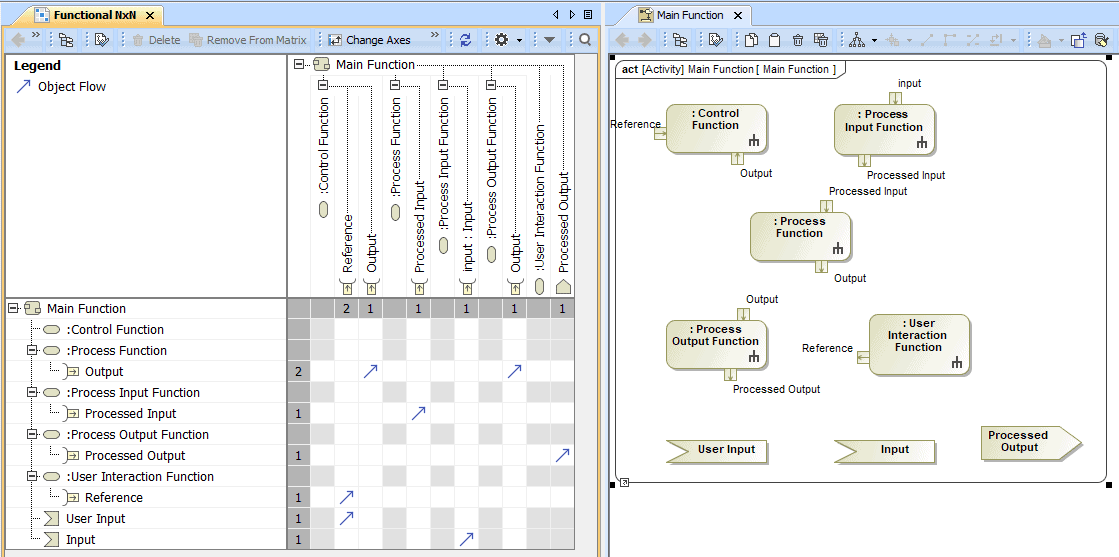

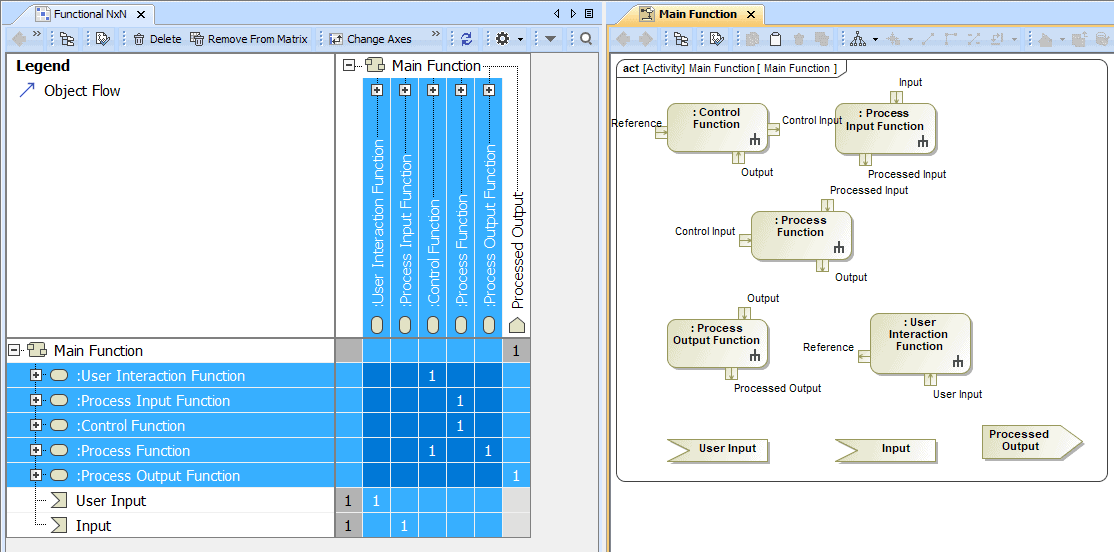

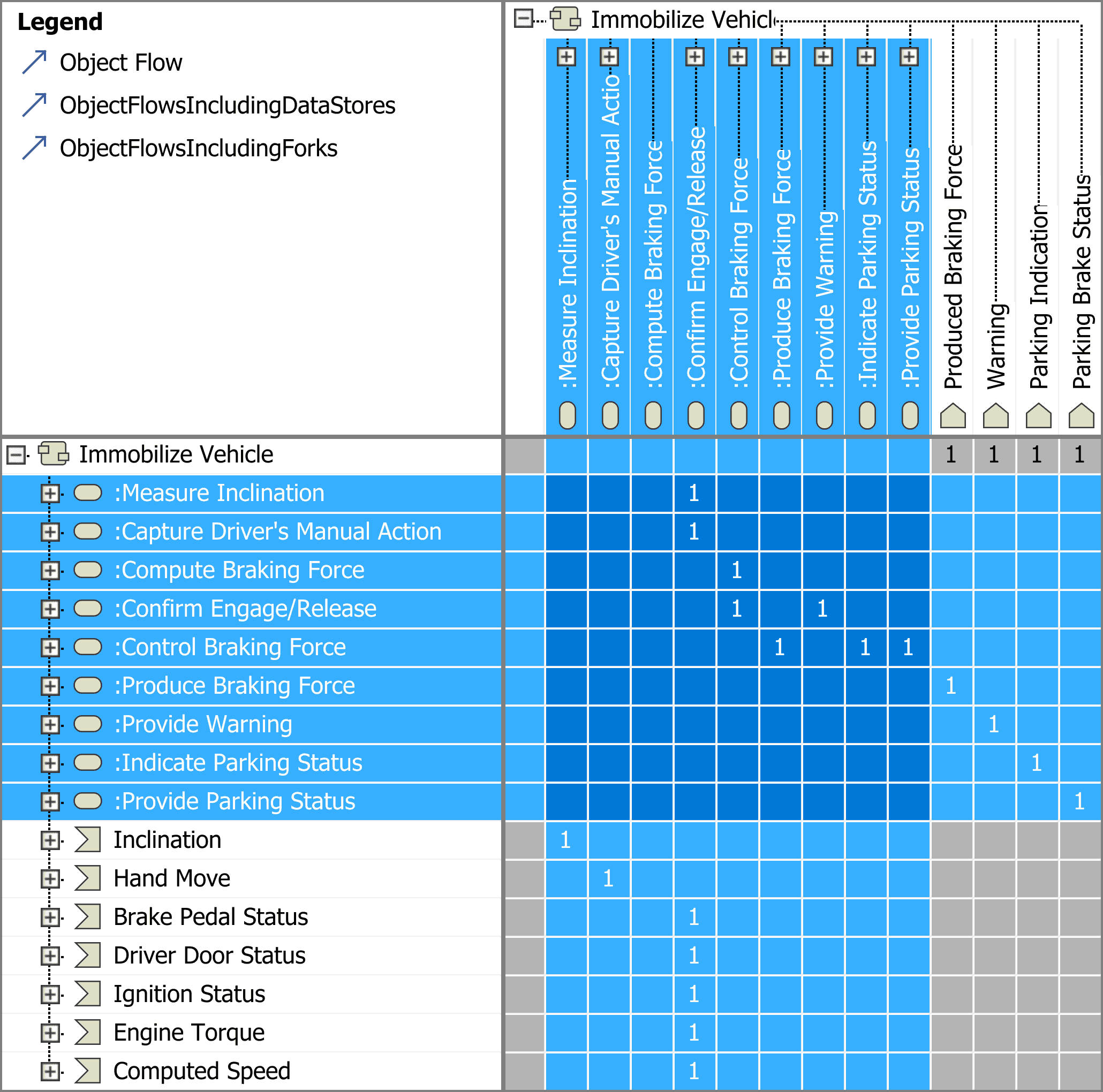

The main benefit of the methodology-plus-tool approach presented here is that the main functional flows are obtained seamlessly from the functional interfaces. These interfaces are represented schematically by N² charts for the functions identified in the SysML BDD hierarchies.

The Process

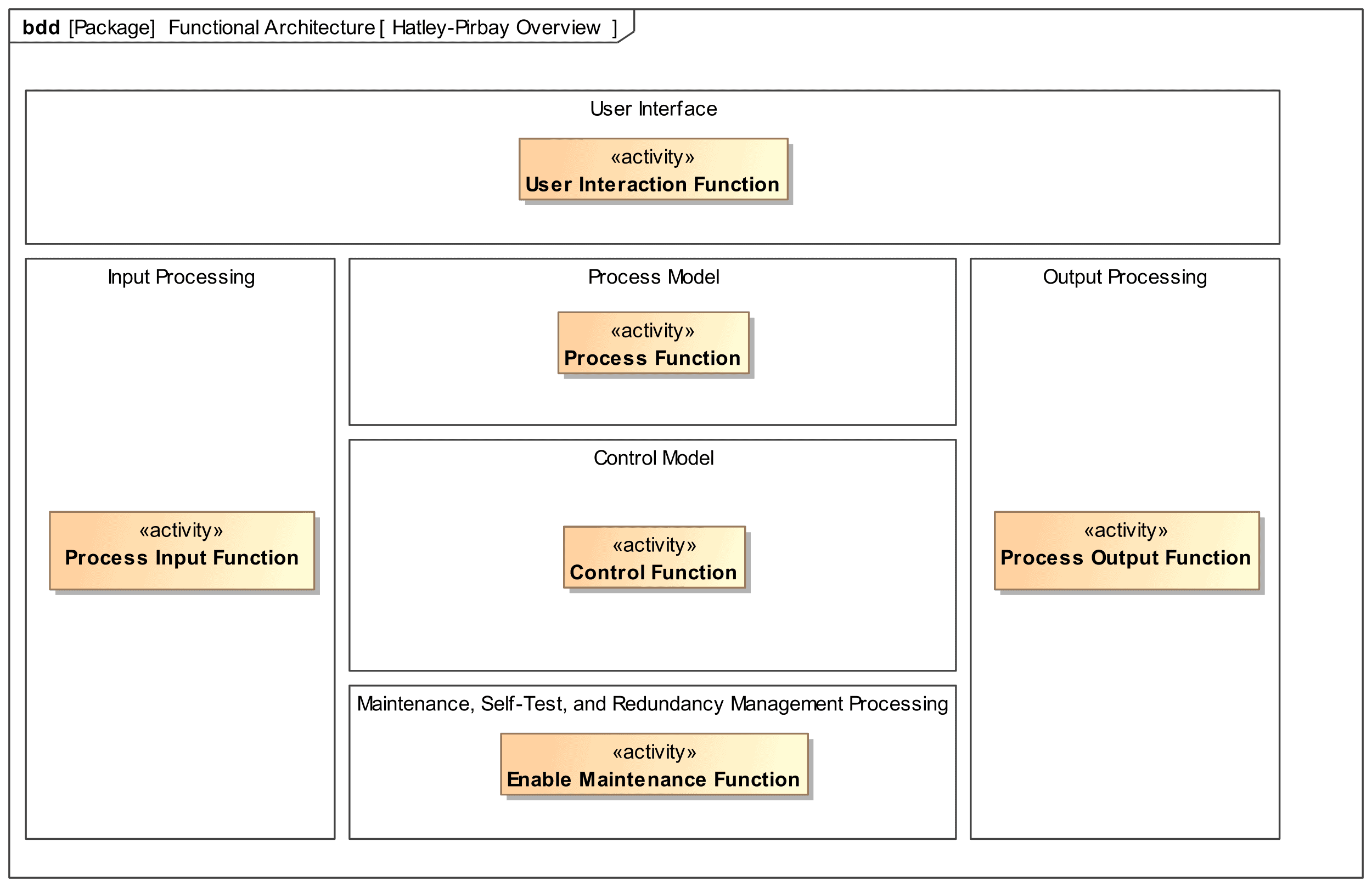

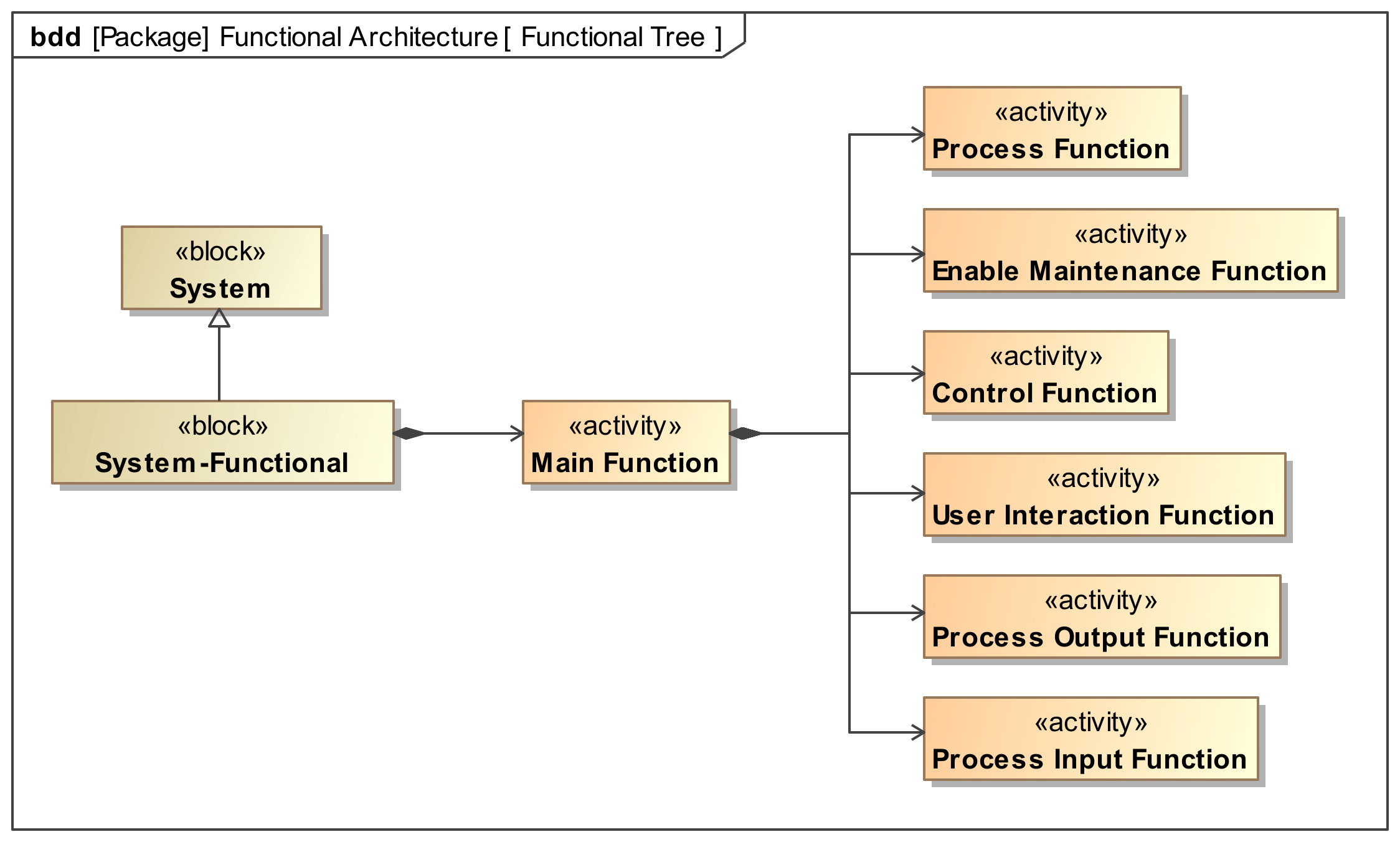

ISE&PPOOA is a requirements-driven systems engineering methodology that integrates model-based systems and software engineering approaches for the development of complex products. The ISE&PPOOA methodological process can be envisioned as the assembly of three dimensions: mission, system, and software. Each dimension has associated project deliverables, mainly models complemented with textual and tabular representations [2].

The mission and systems engineering dimensions of the ISE&PPOOA process are described briefly here. The mission dimension represents the system context, system scenarios, and the operational needs and capabilities related to the mission. An important result of the systems engineering dimension of the ISE&PPOOA methodological process, besides the requirements, is the creation of the functional and physical architectures, identifying the constituent subsystems and their interfaces. The system may have subsystems that are software intensive and/or non-software intensive. For the latter, the physical conservation laws of mass, energy, and momentum are an important issue that should be considered when representing the system views.

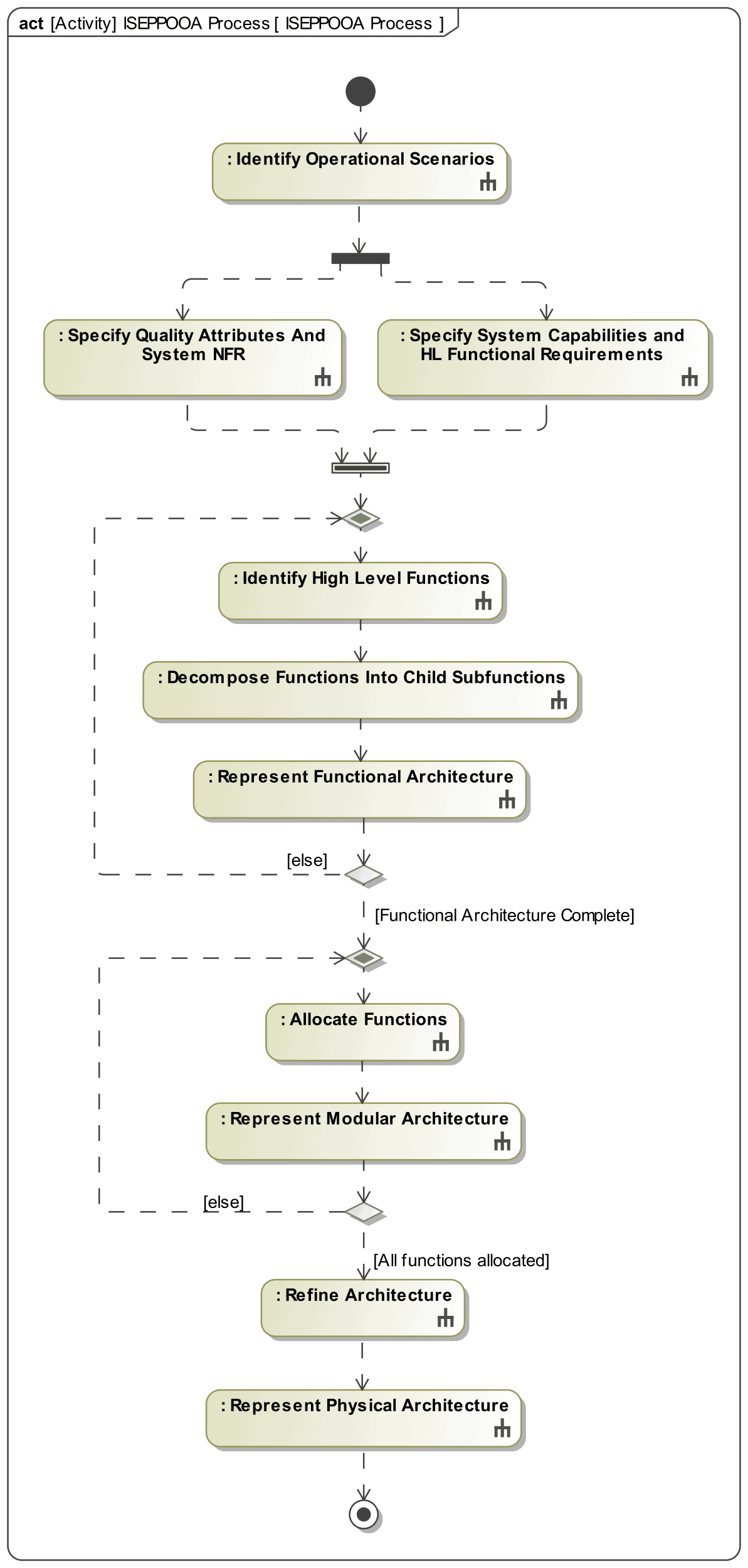

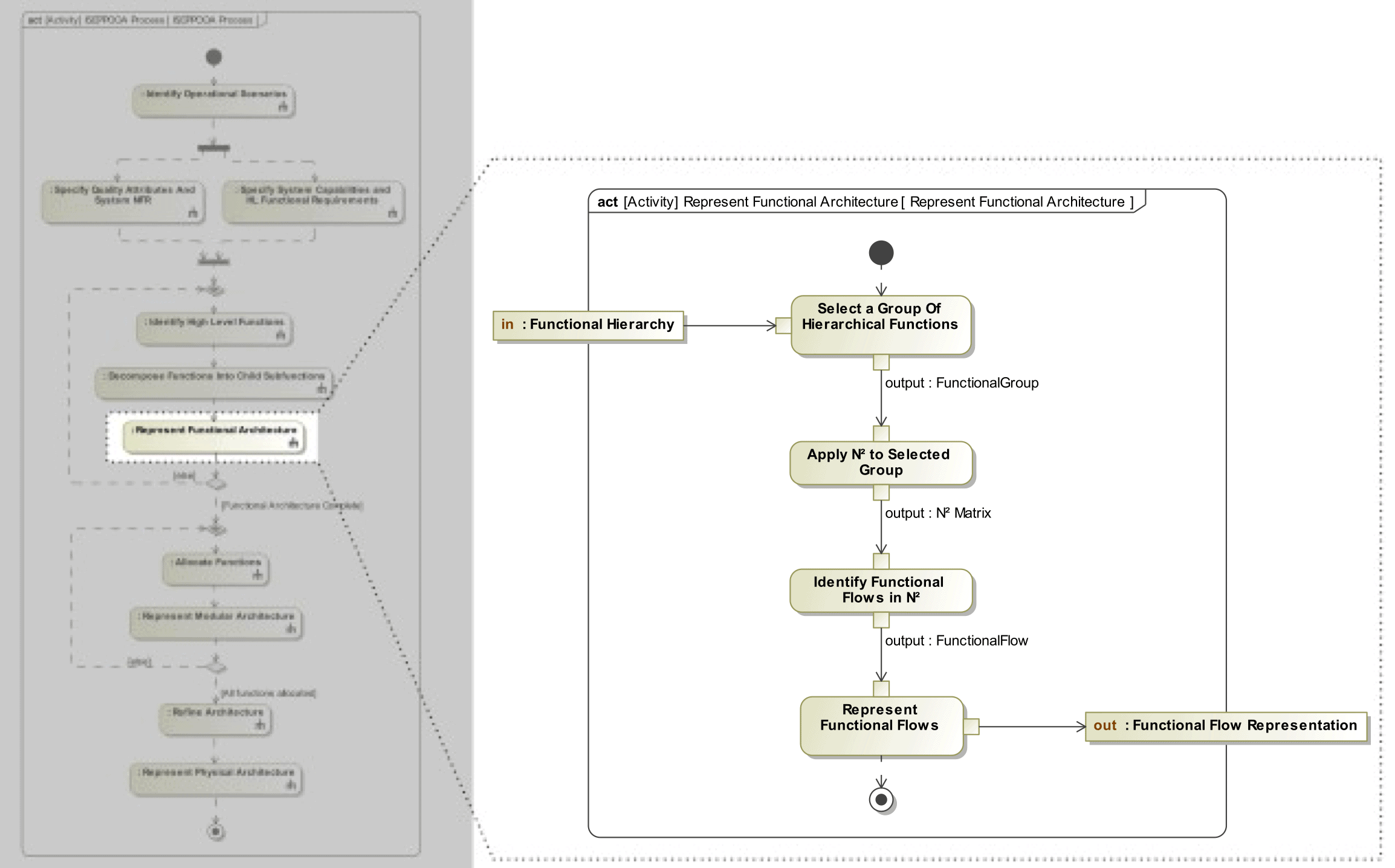

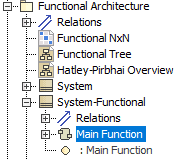

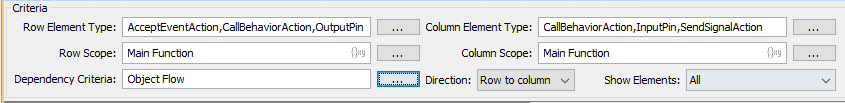

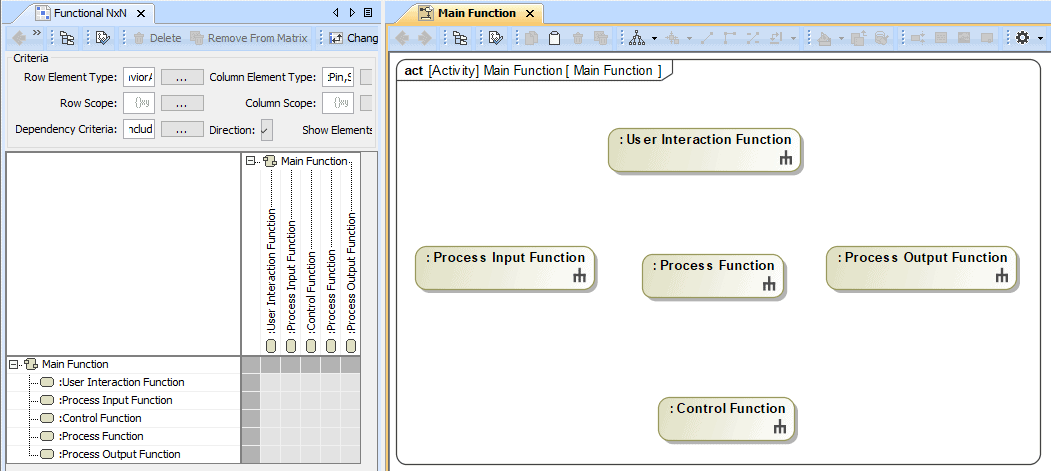

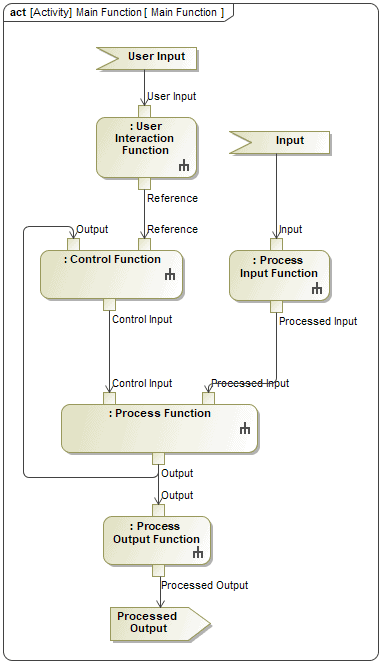

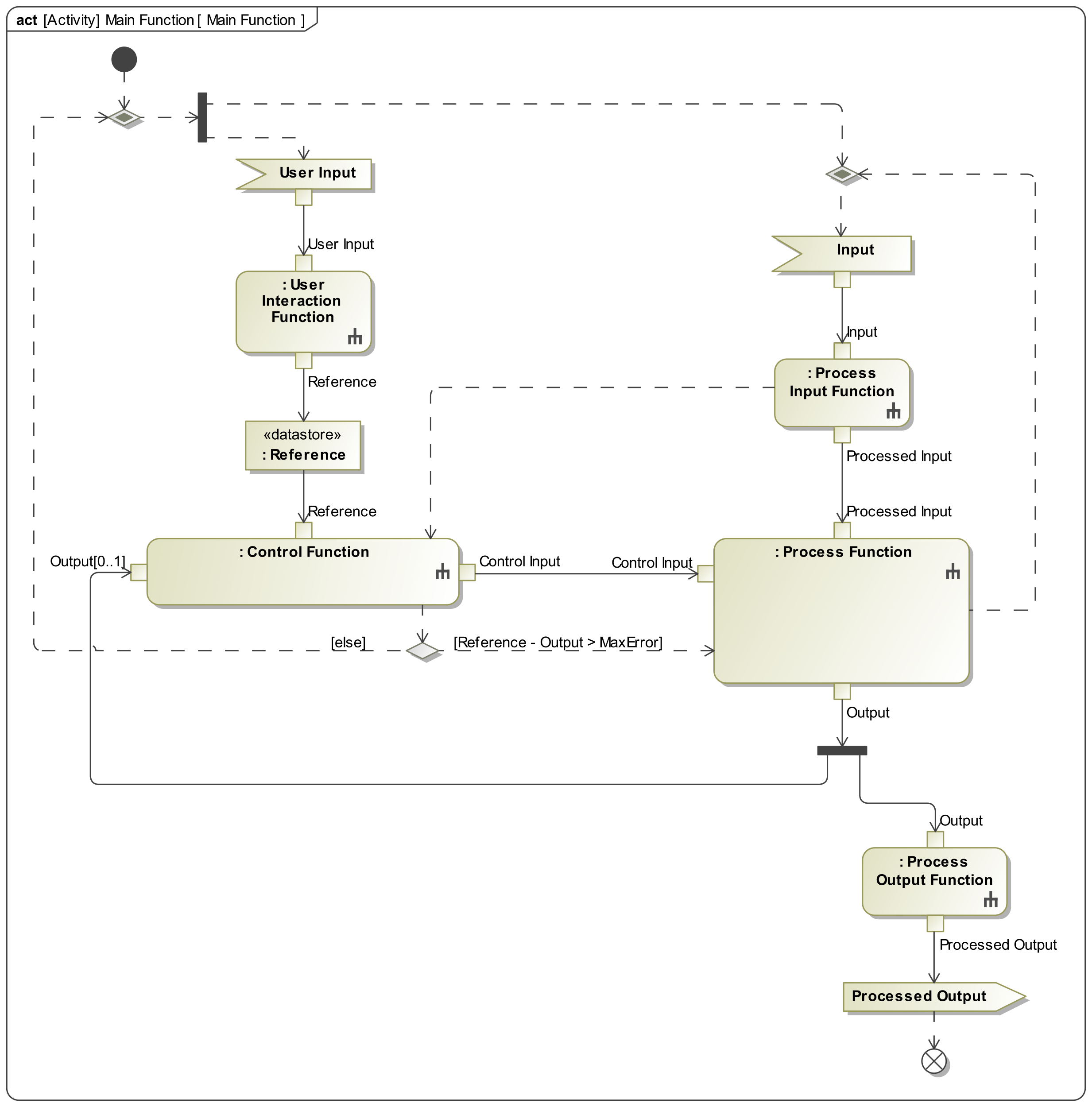

The methodological process presented in Figure 13 has four groups of steps, which are performed sequentially from group 1 to group 4. Step group 2 is split into two parallel (concurrent) steps, and step groups 3 and 4 are executed iteratively [2].

Figure 13. Overview of the ISE&PPOOA Process

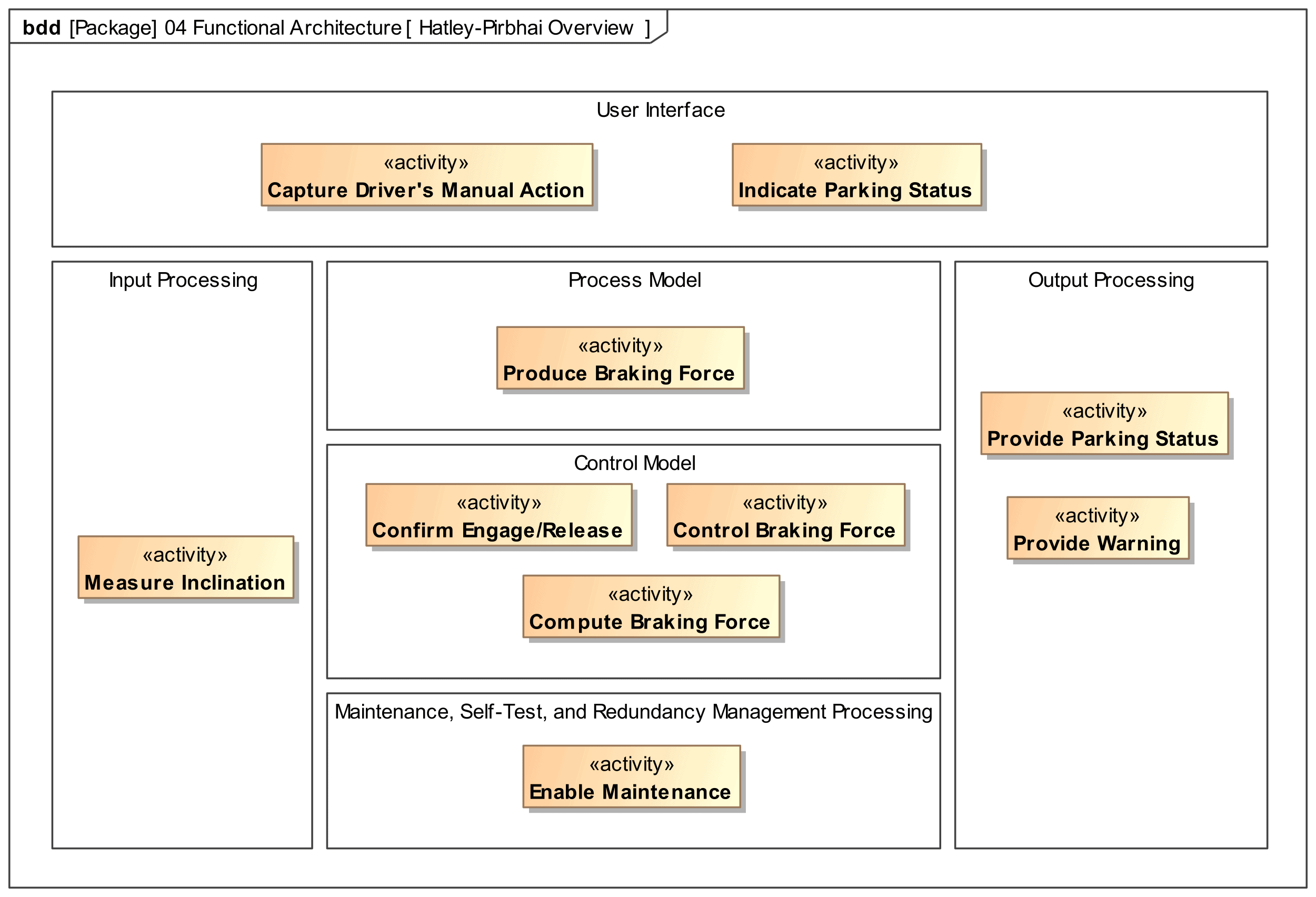

Create the Functional Architecture

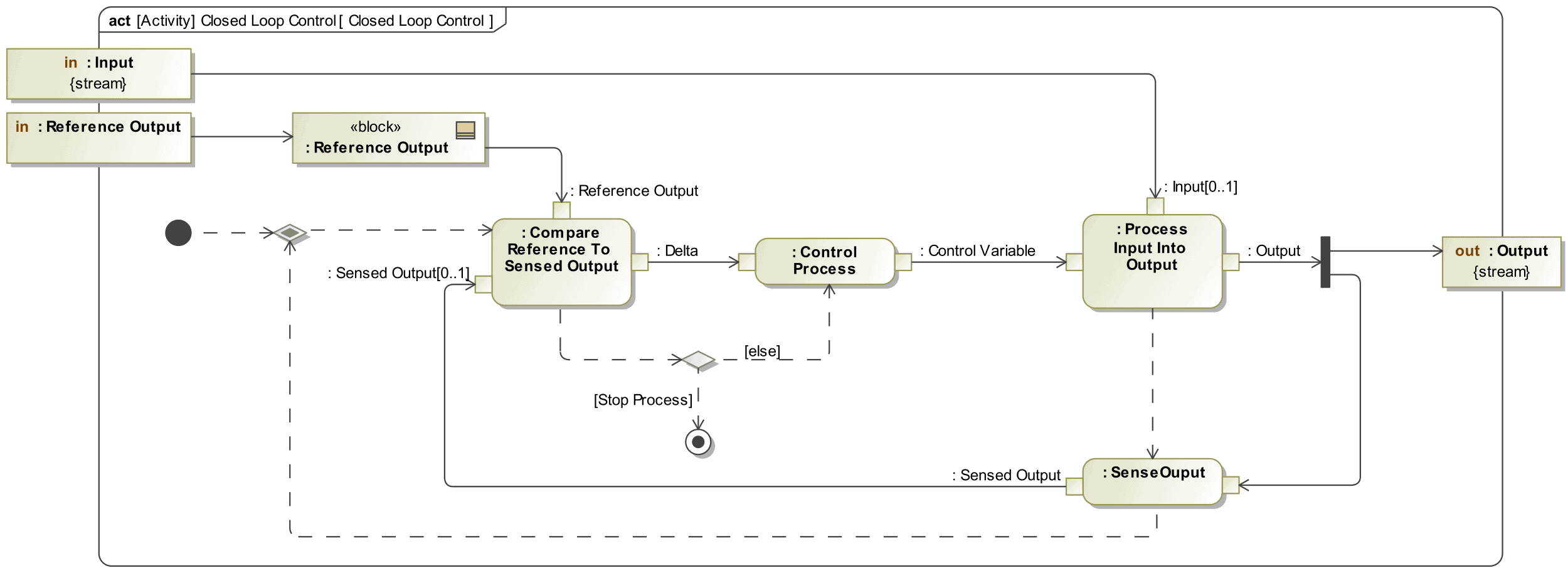

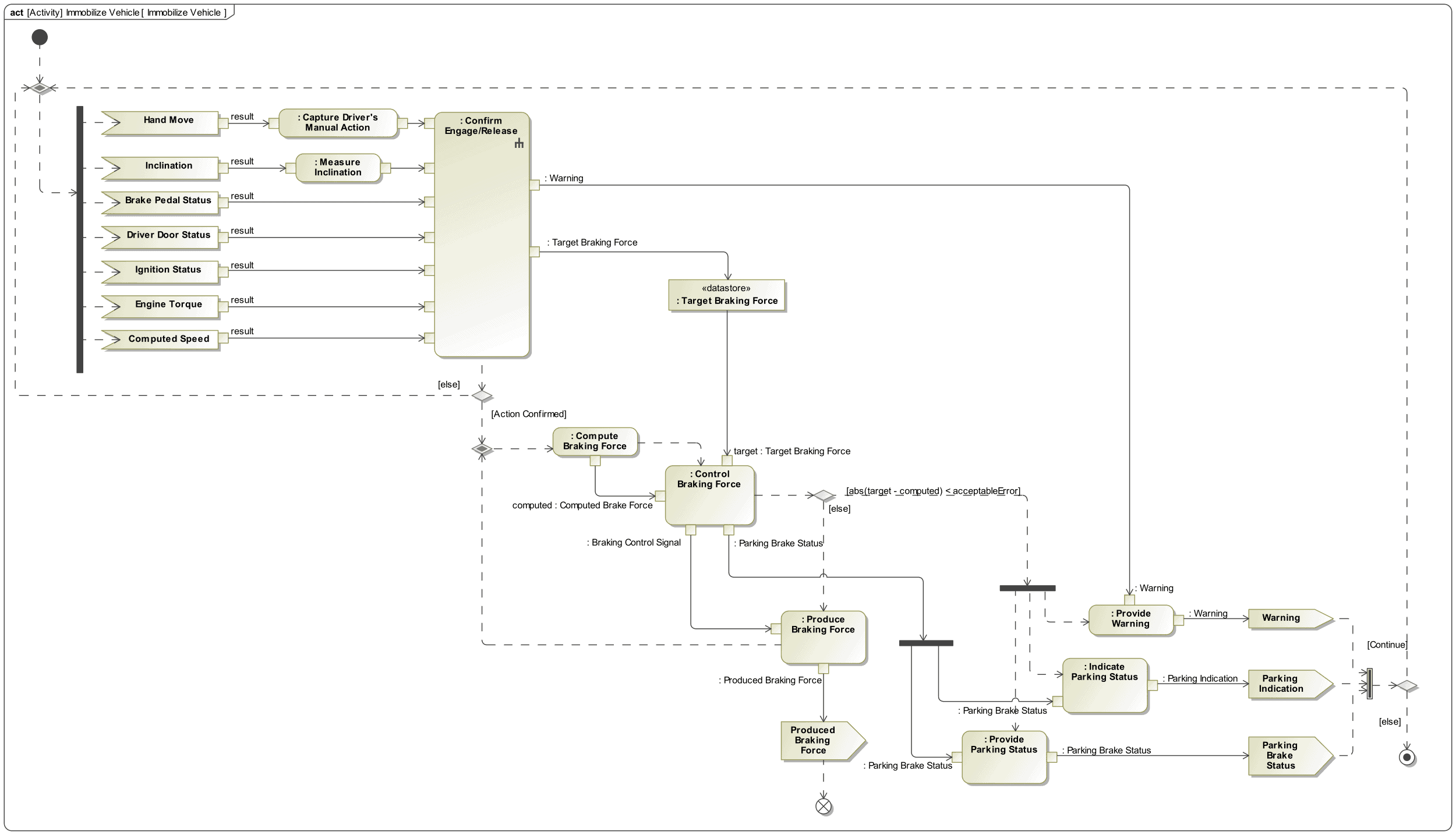

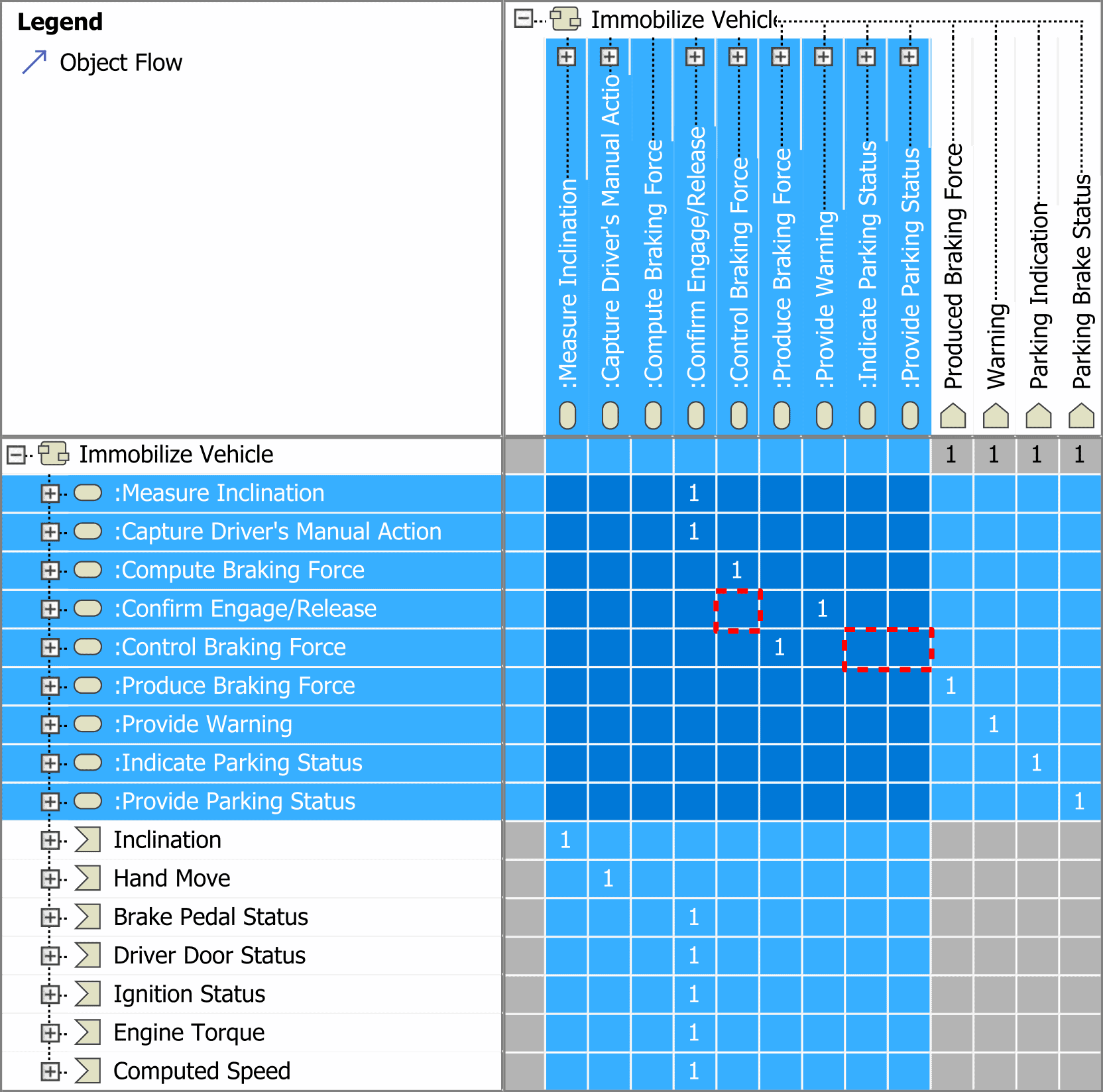

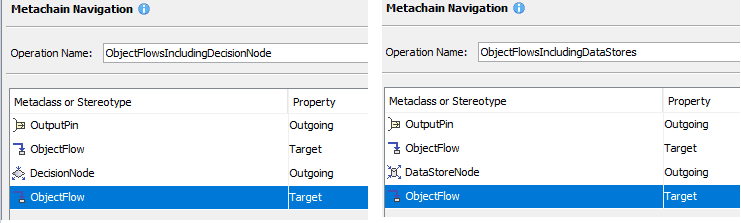

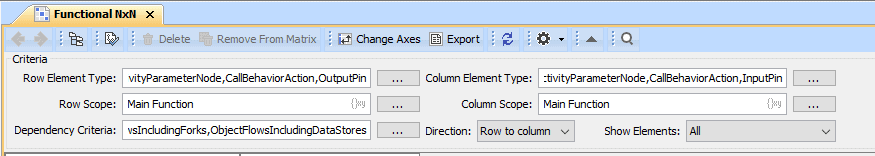

Once the operational scenarios, capabilities, quality attributes, and high-level requirements (functional and non-functional) have been identified, the next step is to build the functional architecture. As shown in Figure 13, this is an iterative process in which:

- the functional hierarchy is defined using SysML block definition diagrams (BDD),

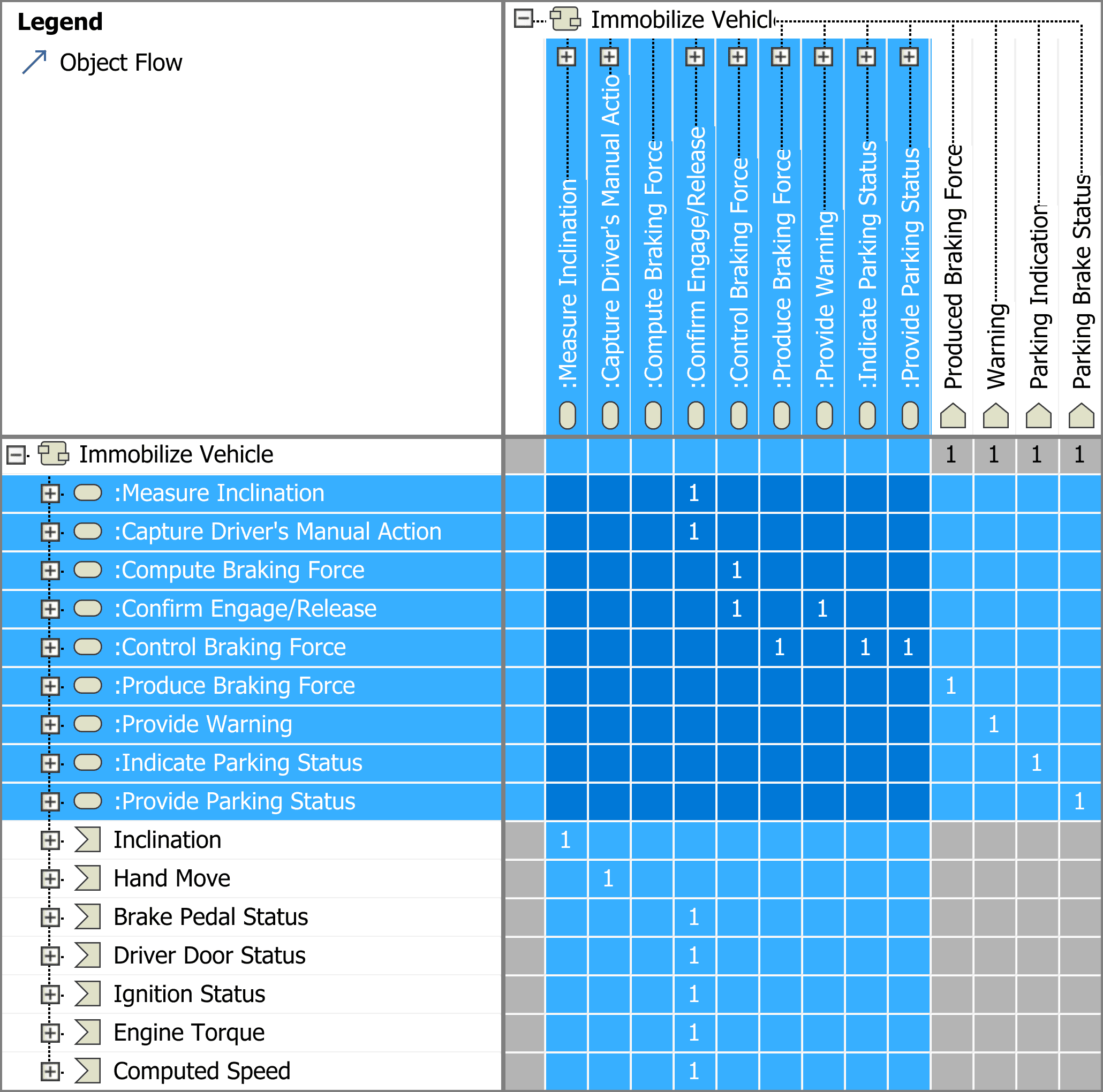

- the main functional interfaces are identified and represented by N² charts [3], where matter, energy or information items that are moved between functional pairs are shown,

- the main functional flows are identified and represented by SysML activity diagrams [4] using the previously defined functional hierarchy and interfaces as inputs.
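The relationship between the N² chart and the functional flows can be sketched in a few lines of Python. This is a minimal illustration, not a feature of ISE&PPOOA or Cameo Systems Modeler. The four EPBS function names and the items they exchange are hypothetical. Deriving a candidate sequence by topological sorting also assumes the interfaces contain no feedback loops; Python’s `graphlib` raises `CycleError` if they do.

```python
from graphlib import TopologicalSorter

# Hypothetical EPBS functions, for illustration only (not the authors' model).
FUNCTIONS = ["Sense slope", "Compute retention force", "Command actuator", "Apply clamp force"]

# Hypothetical functional interfaces: (producer, consumer) -> item exchanged.
INTERFACES = {
    ("Sense slope", "Compute retention force"): "slope angle",
    ("Compute retention force", "Command actuator"): "force setpoint",
    ("Command actuator", "Apply clamp force"): "motor current",
}


def n2_chart(functions, interfaces):
    """Build the N² chart as rows: function names on the diagonal, and in
    off-diagonal cell (i, j) the item flowing from function i to function j."""
    return [
        [src if i == j else interfaces.get((src, dst), "") for j, dst in enumerate(functions)]
        for i, src in enumerate(functions)
    ]


def functional_flow(functions, interfaces):
    """Order the functions so that each producer precedes its consumers.
    Assumes no feedback loops; graphlib raises CycleError otherwise."""
    sorter = TopologicalSorter({f: set() for f in functions})
    for src, dst in interfaces:
        sorter.add(dst, src)  # dst depends on the item produced by src
    return list(sorter.static_order())


if __name__ == "__main__":
    for row in n2_chart(FUNCTIONS, INTERFACES):
        print(" | ".join(cell.ljust(24) for cell in row))
    print(" -> ".join(functional_flow(FUNCTIONS, INTERFACES)))
```

Real N² charts often contain feedback items below the diagonal. Such a loop would have to be broken explicitly, for example by treating it as an iteration in the activity diagram, before a sequence like this could be derived.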

As a result, we will have the representation of all needed functions to satisfy the system requirements, the dependencies between these functions and how they must interact to provide the required behavior.

Define the Functional Hierarchy

The functional hierarchy represents the decomposition of the top-level function(s) of the system into their children. The decomposition continues down to a level of detail at which we can identify the elements of form that will be responsible for providing the allocated functions, all inputs to the system are transformed into outputs, and all operational scenarios are covered.

In ISE&PPOOA, a function is considered a transformation of input flows into output flows (or of their state), according to the action implied in its name. These input/output flows can be material, energy, or data. For example, a motor converts (transformation) electric energy (input) into rotational energy (output). An elevator transports (transformation) persons (both input and output flow) vertically from one floor to another within a building. A brake pedal converts foot force (input flow) into a brake demand signal (output flow).
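The definition of a function as a transformation can be sketched as a small data structure, together with the decomposition criteria given earlier. This is a hypothetical Python illustration, not part of ISE&PPOOA. `Function` and `uncovered_flows` are invented names, and the check covers only one of those criteria: that every system input is consumed, and every system output produced, by some lowest-level function. The example reuses the motor and brake pedal transformations above under an invented parent function.

```python
from dataclasses import dataclass, field


@dataclass
class Function:
    """A function as a transformation of input flows into output flows
    (material, energy, or data), optionally decomposed into children."""
    name: str
    inputs: set[str] = field(default_factory=set)
    outputs: set[str] = field(default_factory=set)
    children: list["Function"] = field(default_factory=list)

    def leaves(self):
        """Yield the lowest-level functions of this hierarchy."""
        if not self.children:
            yield self
        else:
            for child in self.children:
                yield from child.leaves()


def uncovered_flows(top: Function) -> tuple[set[str], set[str]]:
    """Return (system inputs no leaf consumes, system outputs no leaf produces).
    Two empty sets show that this one stopping criterion is met."""
    consumed = set().union(*(leaf.inputs for leaf in top.leaves()))
    produced = set().union(*(leaf.outputs for leaf in top.leaves()))
    return top.inputs - consumed, top.outputs - produced


if __name__ == "__main__":
    # Hypothetical parent function; the children are the examples from the text.
    top = Function(
        "Provide braking",
        {"foot force", "electric energy"},
        {"brake demand signal", "rotational energy"},
        [
            Function("Convert foot force", {"foot force"}, {"brake demand signal"}),
            Function("Convert electric energy", {"electric energy"}, {"rotational energy"}),
        ],
    )
    print(uncovered_flows(top))
```

Dropping the second child would leave "electric energy" unconsumed and "rotational energy" unproduced. That is the kind of gap the text expects the decomposition to close.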

The usual naming convention for a function emphasizes the transformation and the outcome that delivers value, in the form of “action verb + object” [5] or “process + operand” [6]. This is consistent with the definition of function proposed by INCOSE [7]: “a characteristic task, action, or activity that must be performed to achieve a desired outcome.”
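A naming convention like this can be checked mechanically, at least roughly. The Python sketch below is a hypothetical heuristic. It tests only that the first word belongs to a small, invented vocabulary of action verbs and that at least one object word follows. A real project would rely on its own glossary and on peer review rather than on such a check.

```python
# Hypothetical verb vocabulary for illustration; a real project would use its own glossary.
ACTION_VERBS = {"convert", "transport", "hold", "compute", "detect", "release", "provide", "immobilize"}


def follows_verb_object(name: str, verbs: set[str] = ACTION_VERBS) -> bool:
    """Rough check of the 'action verb + object' form: the first word must be
    a known verb and at least one object word must follow it."""
    words = name.split()
    return len(words) >= 2 and words[0].lower() in verbs


if __name__ == "__main__":
    for candidate in ["Convert electric energy", "Electric energy conversion", "Hold"]:
        print(candidate, follows_verb_object(candidate))
```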

To define the functional hierarchy, it is highly recommended to use both top-down and bottom-up approaches. It must be emphasized that identifying these top-level functions and decomposing them is far from a trivial task; it requires a great deal of domain knowledge and abstract thinking. Thus, the systems engineer must request support from other engineers and stakeholders to validate the hierarchy, identify gaps and inconsistencies, and help propose new or alternative functionality.

Top-down Approach

When using the top-down approach, we use the system capabilities and high-level functional requirements, defined in the previous steps of the methodological process, to identify high-level functions.
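Tracing high-level functional requirements to the functions derived from them helps reveal requirements that no function yet addresses. The Python sketch below uses hypothetical requirement IDs, paraphrasing EPBS behaviors described earlier, and hypothetical function names. It illustrates a simple traceability check and is not a step prescribed by ISE&PPOOA.

```python
# Hypothetical high-level functional requirements for the EPBS.
REQUIREMENTS = {
    "REQ-01": "The EPBS shall hold the parked vehicle in place on a slope.",
    "REQ-02": "The EPBS shall prevent the vehicle from rolling back when starting on a ramp.",
    "REQ-03": "The EPBS shall stop the moving vehicle when the driver commands emergency braking.",
}

# Hypothetical allocation of requirements to candidate high-level functions.
ALLOCATION = {
    "Hold vehicle": {"REQ-01"},
    "Assist hill start": {"REQ-02"},
}


def untraced_requirements(requirements: dict[str, str], allocation: dict[str, set[str]]) -> list[str]:
    """Return the IDs of requirements that no candidate function traces to yet."""
    traced = set().union(*allocation.values())
    return sorted(req_id for req_id in requirements if req_id not in traced)


if __name__ == "__main__":
    print(untraced_requirements(REQUIREMENTS, ALLOCATION))
```

Here REQ-03 is flagged, which would prompt the engineer to identify a further high-level function, such as one for emergency braking.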