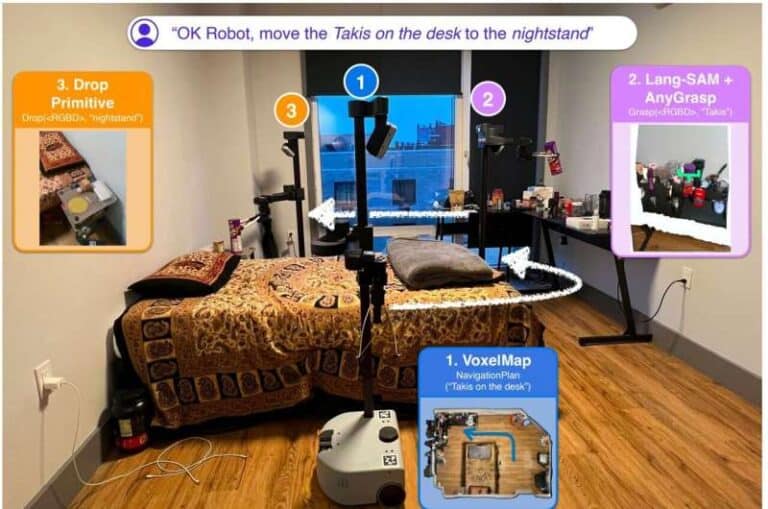

OK-Robot: Home 2 (Video credit: Nur Muhammad Shafiullah)

A team of roboticists from New York University, working with AI specialists from Meta, has developed a versatile robot that can navigate unfamiliar environments and carry out object manipulation tasks on request. Their findings, detailed in a paper posted on the arXiv preprint server, show how vision-language models (VLMs) can be integrated with existing robotic capabilities.

The researchers note that VLMs have improved markedly in recent years, particularly at recognizing objects from language prompts. Robotic skills such as grasping and manipulation have advanced as well. According to the team, however, little effort had been made to combine VLMs with skilled robots before this study.

For this research, the team used a robot provided by Hello Robot, equipped with wheels, a pole, and retractable arms with grippers for hands. The robot, named OK-Robot, was paired with a previously trained VLM so that it could interpret and act on verbal commands for object manipulation tasks.

To evaluate OK-Robot, the researchers ran experiments in the homes of ten volunteers. In each home they first captured 3D video of the environment with an iPhone and supplied it to OK-Robot so that it could learn the layout. They then gave the robot moving tasks, such as relocating an item from one place to another based on a verbal instruction.
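The workflow described above (scan the home, receive an instruction, then move an item) can be pictured as a short sequence of steps. The following is a minimal sketch only, not the researchers' code: the `Step` class, the `plan_pick_and_drop` function, the toy "move the X to the Y" grammar, and the dictionary standing in for the scanned home are all hypothetical names and simplifications introduced for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Step:
    action: str  # "navigate", "pick", or "drop"
    target: str  # object or place named in the instruction


def parse_instruction(text: str) -> tuple[str, str]:
    """Split 'move the X to the Y' into (item, destination). Toy grammar only."""
    match = re.fullmatch(r"move the (.+?) to the (.+)", text.strip().lower())
    if match is None:
        raise ValueError(f"unsupported instruction: {text!r}")
    return match.group(1), match.group(2)


def plan_pick_and_drop(text: str, scanned_map: dict) -> list[Step]:
    """Turn one instruction into navigate/pick/navigate/drop steps.

    scanned_map is a hypothetical stand-in for the scan of the home:
    it maps a name to an (x, y) position in metres.
    """
    item, destination = parse_instruction(text)
    for name in (item, destination):
        if name not in scanned_map:
            raise KeyError(f"{name!r} was not found in the scanned home")
    return [
        Step("navigate", item),
        Step("pick", item),
        Step("navigate", destination),
        Step("drop", destination),
    ]


# Hypothetical scan result and instruction.
home = {"soda can": (1.0, 2.0), "kitchen sink": (4.0, 0.5)}
for step in plan_pick_and_drop("Move the soda can to the kitchen sink", home):
    print(step.action, "->", step.target)
```

A real system would replace the dictionary lookup with perception and the step list with actual motion planning; the sketch only shows the order in which the stages depend on each other.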

OK-Robot completed 58% of its 170 assigned tasks across these varied real-world homes. When the researchers decluttered the workspace, the success rate rose to 82%, which suggests there is room for further improvement.
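To put the percentages in concrete terms, the arithmetic can be worked through in a few lines. This is a rough sketch that uses only the rounded figures quoted above, so the task count it produces is approximate; the variable names are illustrative.

```python
total_tasks = 170          # tasks assigned across the ten homes
baseline_rate = 0.58       # reported overall success rate
decluttered_rate = 0.82    # reported success rate after decluttering

# Approximate number of successful tasks implied by the rounded 58% figure.
approx_successes = round(total_tasks * baseline_rate)

# Improvement from decluttering, in percentage points and relative terms.
gain_points = round((decluttered_rate - baseline_rate) * 100)
relative_gain = (decluttered_rate - baseline_rate) / baseline_rate

print(f"about {approx_successes} of {total_tasks} tasks succeeded")
print(f"decluttering added {gain_points} percentage points "
      f"({relative_gain:.0%} relative improvement)")
```

The article does not state how many tasks were run in the decluttered setting, so no task count is derived for the 82% figure.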

A key feature of the research is its zero-shot approach: OK-Robot carries out tasks without any prior training in the specific environment. The results suggest that VLM-based robotic systems are feasible and effective in real-world settings.
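One common way to find an object from a language prompt without environment-specific training is to compare an embedding of the prompt against embeddings stored for things seen in the scan, and pick the closest match. The sketch below illustrates that general idea and is not a description of the researchers' implementation. The three-number vectors are hand-written stand-ins for what a pretrained VLM would produce, and `memory`, `locate`, and the positions are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def locate(query_embedding, memory):
    """Return (label, position) of the stored entry most similar to the query."""
    best = max(
        memory,
        key=lambda label: cosine_similarity(query_embedding, memory[label][0]),
    )
    return best, memory[best][1]


# Hypothetical memory built from a home scan: label -> (embedding, (x, y) position).
# Real embeddings would come from a pretrained model, not be typed by hand.
memory = {
    "mug": ([0.9, 0.1, 0.0], (1.2, 0.4)),
    "book": ([0.1, 0.9, 0.1], (0.5, 1.8)),
    "sofa": ([0.0, 0.2, 0.9], (3.0, 2.5)),
}

# Stand-in embedding for a prompt such as "the coffee cup".
query = [0.8, 0.2, 0.1]
label, position = locate(query, memory)
print(label, position)
```

Nothing in the lookup is fitted to a particular home: the same comparison works on any scan, which is the sense in which such a system needs no training in the specific environment.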

Looking ahead, the research team expects that OK-Robot can be improved through iterative refinement and by using more sophisticated robotic platforms. They describe their work as a step towards VLM-based robots with greater autonomy and versatility.

In conclusion, the research by the NYU roboticists and their collaborators points to promising applications ranging from household assistance to industrial automation. As robotics continues to evolve, systems like OK-Robot show how human-robot interaction may develop.

For further detail on the research findings, refer to the paper on the arXiv preprint server.

References

Yirka, B 2024, ‘A robot that can pick up objects and drop them in a desired location in an unfamiliar house’, Tech Xplore, viewed 8 March 2024, <https://techxplore.com/news/2024-02-robot-desired-unfamiliar-house.html>